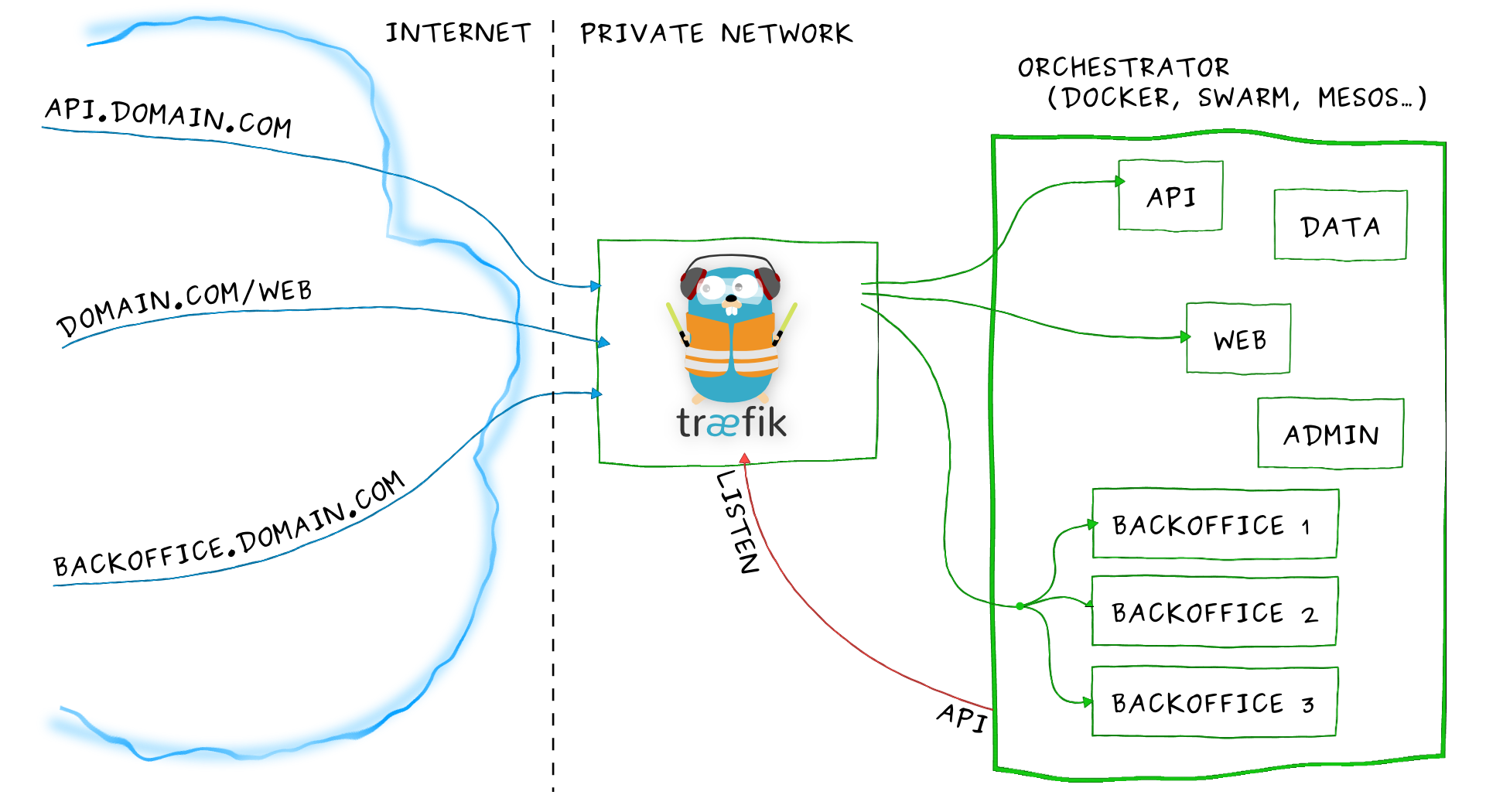

This post will show you how to set up a Swarm cluster, deploy a couple of microservices, and create a reverse proxy service (with Traefik) in charge of routing requests based on their base URLs.

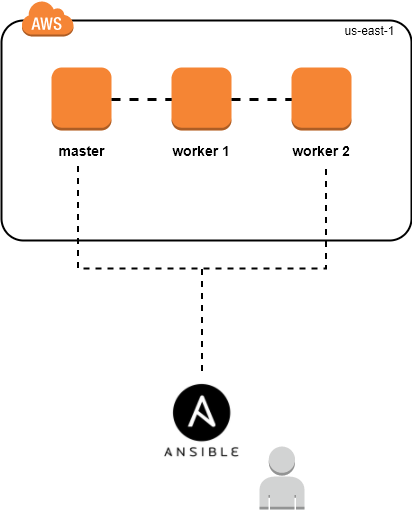

If you haven’t already, create a Swarm cluster. You can use the shell script below to set up a cluster with 3 nodes (1 manager and 2 workers):

    #!/bin/sh

Issue the following command to execute the script:

    chmod +x setup.sh

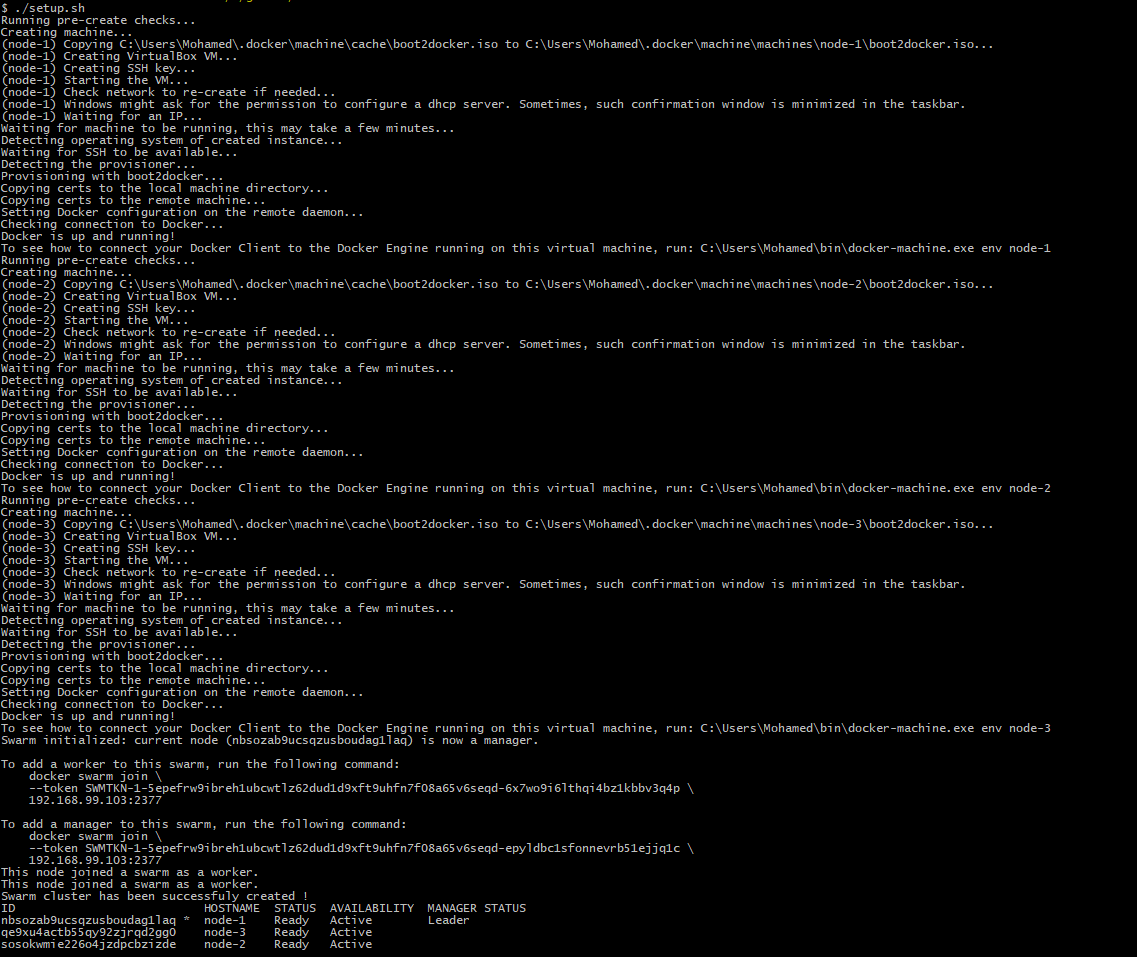

The output of the above command is as follows:

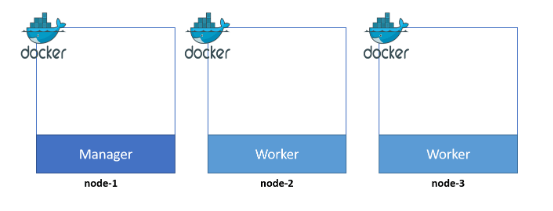

At this moment, we have 3 nodes:

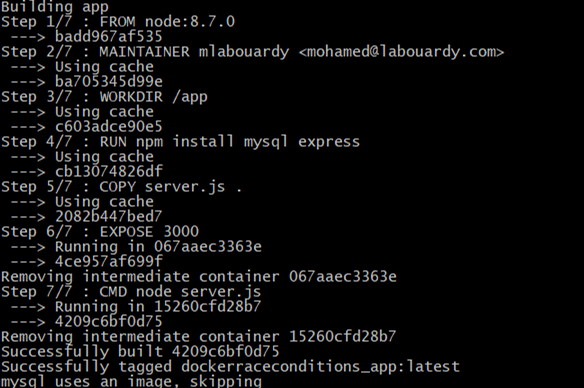

Our example microservice application consists of two parts: the Books API and the Movies API. For both parts I have prepared images that can be pulled from Docker Hub.

The Dockerfiles for both images can be found on my GitHub.

Create a docker-compose.yml file with the following content:

    version: "3.3"

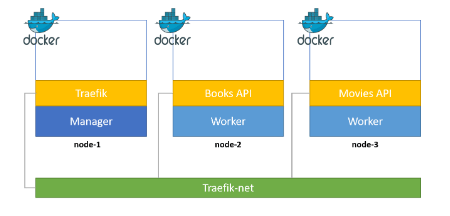

- We use an overlay network named traefik-net, to which we attach the services we want to expose through Traefik.

- We use placement constraints to deploy the APIs on the workers and Traefik on the Swarm manager.

- The Traefik container is configured to listen on port 80 for standard HTTP traffic, and it also exposes port 8080 for a web dashboard.

- Mounting the Docker socket (/var/run/docker.sock) allows Traefik to listen to Docker daemon events and reconfigure itself when containers are started or stopped.

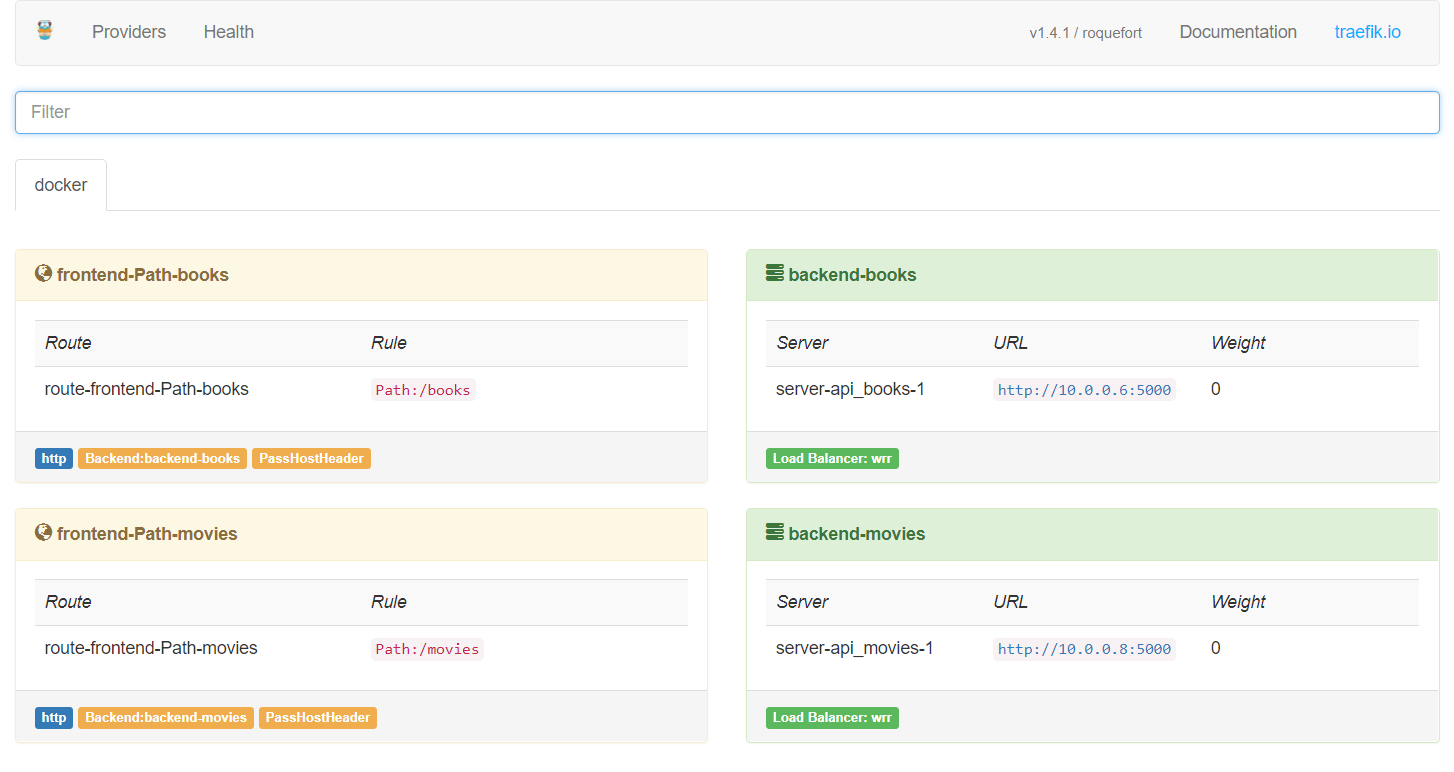

- The label traefik.frontend.rule is used by Traefik to determine which container should serve which request path.

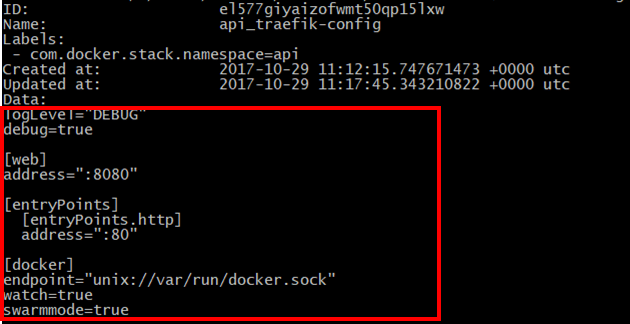

- The configs section creates a configuration file for Traefik from config.toml (it enables the Docker backend):

    logLevel="DEBUG"
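To make the pieces described above concrete, here is a minimal sketch, not the original files from this post. It assumes Traefik 1.x (traefik.frontend.rule is a v1-style label) and a PathPrefix rule. The image names (example/books-api, example/movies-api), the API port (5000), the service names and the traefik-sketch output directory are placeholders picked for illustration. Only the stack name api, the traefik-net network, the traefik-config config, ports 80 and 8080, and the placement constraints come from the text.

```shell
#!/bin/sh
# Sketch only: writes a Traefik v1-style config.toml and a Swarm stack file.
# Image names, service names and the API port are placeholders (assumptions).
set -eu

OUT_DIR="${OUT_DIR:-traefik-sketch}"   # hypothetical output directory
mkdir -p "$OUT_DIR"

# Traefik v1 configuration enabling the Docker backend in Swarm mode.
cat > "$OUT_DIR/config.toml" <<'EOF'
logLevel = "DEBUG"
defaultEntryPoints = ["http"]

[entryPoints]
  [entryPoints.http]
  address = ":80"

# Enables the API and dashboard (port 8080 by default in Traefik 1.x)
[api]

[docker]
endpoint = "unix:///var/run/docker.sock"
watch = true
swarmMode = true
EOF

# Stack file: Traefik on the manager, both APIs on the workers.
cat > "$OUT_DIR/docker-compose.yml" <<'EOF'
version: "3.3"

services:
  traefik:
    image: traefik:1.7
    ports:
      - "80:80"
      - "8080:8080"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    networks:
      - traefik-net
    configs:
      - source: traefik-config
        target: /etc/traefik/traefik.toml
    deploy:
      placement:
        constraints:
          - node.role == manager

  books-api:
    image: example/books-api        # placeholder image
    networks:
      - traefik-net
    deploy:
      placement:
        constraints:
          - node.role == worker
      labels:
        - "traefik.port=5000"       # placeholder port
        - "traefik.docker.network=api_traefik-net"
        - "traefik.frontend.rule=PathPrefix:/books"

  movies-api:
    image: example/movies-api       # placeholder image
    networks:
      - traefik-net
    deploy:
      placement:
        constraints:
          - node.role == worker
      labels:
        - "traefik.port=5000"       # placeholder port
        - "traefik.docker.network=api_traefik-net"
        - "traefik.frontend.rule=PathPrefix:/movies"

configs:
  traefik-config:
    file: ./config.toml

networks:
  traefik-net:
    driver: overlay
EOF

echo "Wrote $OUT_DIR/config.toml and $OUT_DIR/docker-compose.yml"
```

The files are written to a separate directory so the script never overwrites a docker-compose.yml you already have. The traefik.docker.network label uses the prefixed name api_traefik-net because a stack deployed under the name api prefixes its networks with the stack name.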

In order to deploy our stack, we should execute the following command:

    docker stack deploy --compose-file docker-compose.yml api

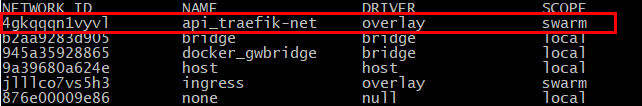

Let’s check the overlay network:

    docker network ls

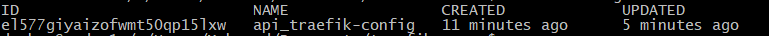

Traefik configuration:

    docker config ls

To display the configuration content:

    docker config inspect api_traefik-config --pretty

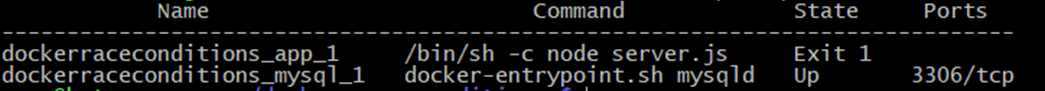

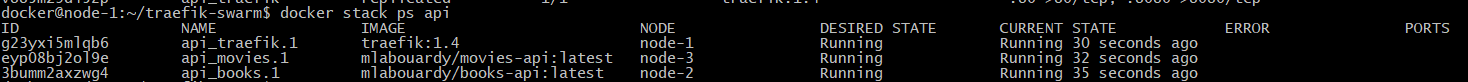

And finally, to list all the services:

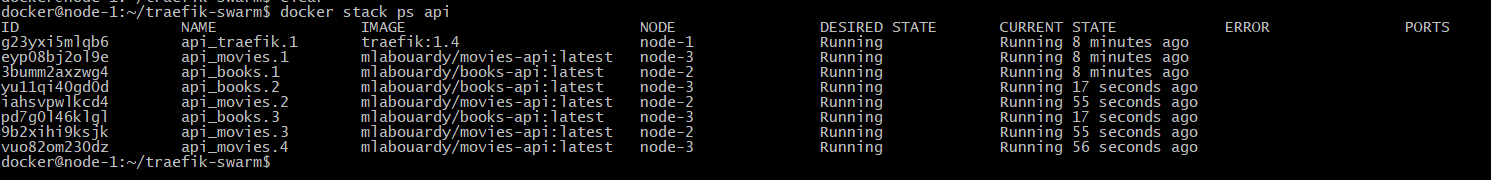

    docker stack ps api

In the list above, you can see that the 3 containers are running on node-1, node-2 and node-3:

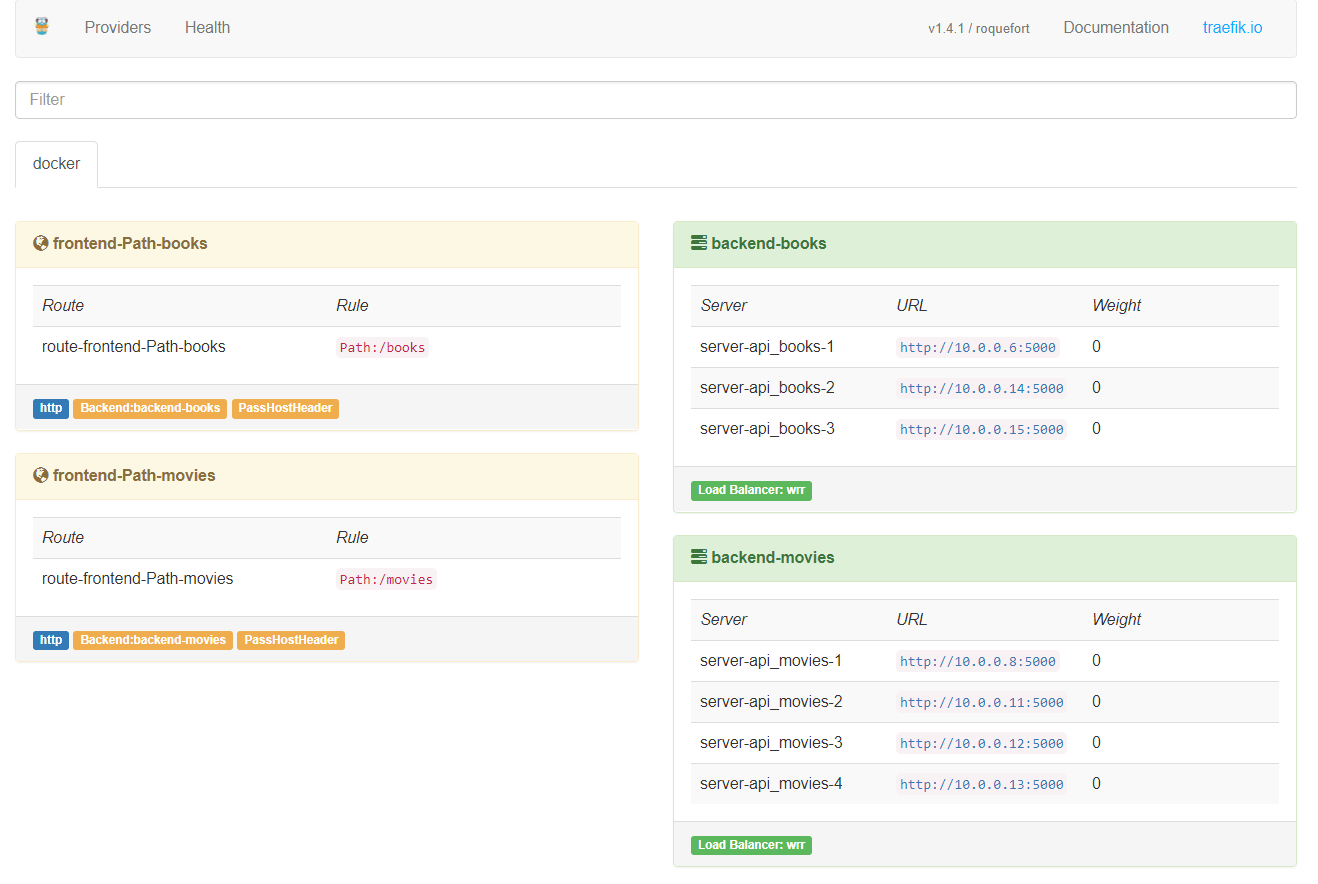

If you point your favorite browser (not you IE 😂) to the Traefik Dashboard URL (http://MANAGER_NODE_IP:8080) you should see that the frontends and backends are well defined:

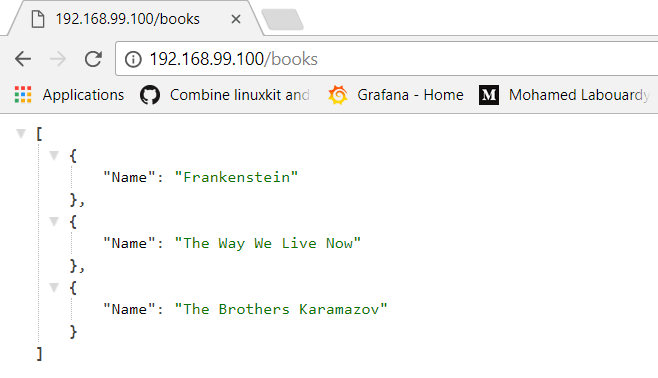

If you check http://MANAGER_NODE_IP/books, you will get a list of books:

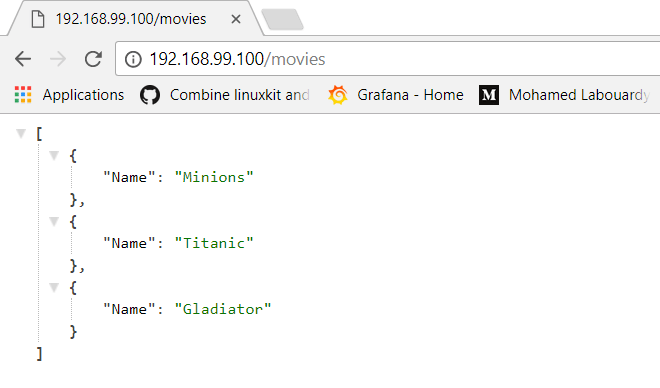

If you replace the base URL with /movies:

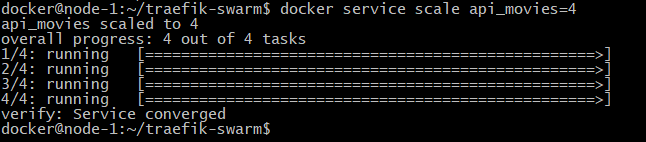

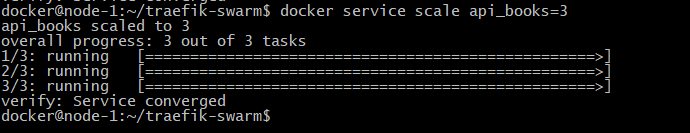
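The two requests above are dispatched purely on the request path. As a rough mental model, and assuming the traefik.frontend.rule labels use prefix matching (the exact rule is not shown in this post), the decision can be pictured as the toy function below. It is not how Traefik is implemented, and the backend names are hypothetical.

```shell
#!/bin/sh
# Toy model of prefix-based routing; not Traefik's actual implementation.
# Backend names are hypothetical labels used for illustration only.
route_backend() {
    case "$1" in
        /books*)  echo "books-api" ;;
        /movies*) echo "movies-api" ;;
        *)        echo "no-match" ;;   # Traefik would answer 404 here
    esac
}

route_backend /books       # prints books-api
route_backend /movies/42   # prints movies-api
route_backend /health      # prints no-match
```

Adding a third API would then amount to one more label on a new service, with no change to the proxy's own configuration.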

What happens if we want to scale out the Books and Movies APIs? We can do that with the docker service scale command:

We can confirm that:
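The exact commands are not reproduced here, so the following is only a sketch of what the scale and confirm steps might look like. The stack name api comes from the deploy command earlier; the service names books-api and movies-api and the replica counts in the usage comment are assumptions, so substitute the names reported by docker stack services api.

```shell
#!/bin/sh
# Sketch with hypothetical service names; the stack name "api" is from the
# "docker stack deploy" command used earlier in the post.
STACK="${STACK:-api}"

# Scale the two APIs: first argument is the Books replica count,
# second argument is the Movies replica count.
scale_apis() {
    docker service scale \
        "${STACK}_books-api=$1" \
        "${STACK}_movies-api=$2"
}

# List the stack's services together with their replica counts.
confirm_scale() {
    docker stack services "$STACK"
}

# Usage, on the manager node (replica counts are arbitrary examples):
#   scale_apis 3 3
#   confirm_scale
```

Both helpers only wrap existing Docker CLI subcommands, so they need to run on a node that can reach a Swarm manager.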

Traefik recognised that we started more containers and automatically made them available to the right frontend:

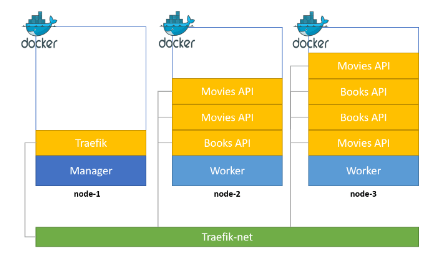

In the diagram below, you will find that the manager has decided to schedule the new containers on node-2 (3 of them) and node-3 (4 of them) using the Round Robin strategy.

Drop your comments, feedback, or suggestions below — or connect with me directly on Twitter @mlabouardy.