It’s easy to run into race conditions with Docker Compose. Take a common pattern: an application server that depends on a database. Compose does not wait for the database inside its container to finish initializing, so if the application starts and tries to connect before the database server is ready, the connection simply fails.

Here is an example of this race condition with a NodeJS app and MySQL:

```javascript
var MySQL = require('mysql'),
```
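To see the race in isolation, here is a minimal sketch that reproduces it without MySQL, using only Node's built-in `net` module. It is not the app code above: a plain TCP server that starts listening after an arbitrary 500 ms delay stands in for the database, and `connectOnce`, `startSlowServer` and `demo` are hypothetical names used only for illustration.

```javascript
// Sketch: reproduce the startup race with plain TCP (no MySQL involved).
// Assumption: a delayed TCP server stands in for a database that is still initializing.
const net = require('net');

// "App" side: a single connection attempt with no retry,
// like a client that connects once at startup.
function connectOnce(port, host = '127.0.0.1') {
  return new Promise((resolve, reject) => {
    const socket = net.connect(port, host);
    socket.once('connect', () => {
      socket.end();
      resolve('connected');
    });
    // Fires (e.g. with ECONNREFUSED) while nothing is listening on the port yet.
    socket.once('error', reject);
  });
}

// "Database" side: only starts listening after `delayMs`.
function startSlowServer(port, delayMs) {
  return new Promise((resolve) => {
    setTimeout(() => {
      const server = net.createServer((sock) => sock.end());
      server.listen(port, '127.0.0.1', () => resolve(server));
    }, delayMs);
  });
}

async function demo() {
  // Grab a free port, then release it so nothing listens on it yet.
  const probe = net.createServer();
  await new Promise((resolve) => probe.listen(0, '127.0.0.1', resolve));
  const { port } = probe.address();
  await new Promise((resolve) => probe.close(resolve));

  const dbReady = startSlowServer(port, 500); // arbitrary "init" delay

  // The app connects immediately, before the "database" is listening.
  let firstAttempt;
  try {
    firstAttempt = await connectOnce(port);
  } catch (err) {
    firstAttempt = err.code;
  }

  // Once the server is up, the very same call succeeds.
  const server = await dbReady;
  const secondAttempt = await connectOnce(port);
  await new Promise((resolve) => server.close(resolve));
  return { firstAttempt, secondAttempt };
}

const outcome = demo();
outcome.then((result) => console.log(result));
```

The first attempt fails only because of timing; nothing is wrong with the "database" itself, which is exactly what happens between the app and MySQL containers.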

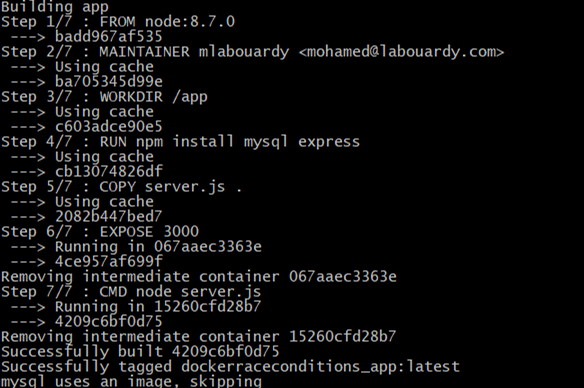

To build the application image, I used the following Dockerfile:

```dockerfile
FROM node:8.7.0
```

To deploy the stack, I used the following Compose file:

```yaml
version: "3.0"
```

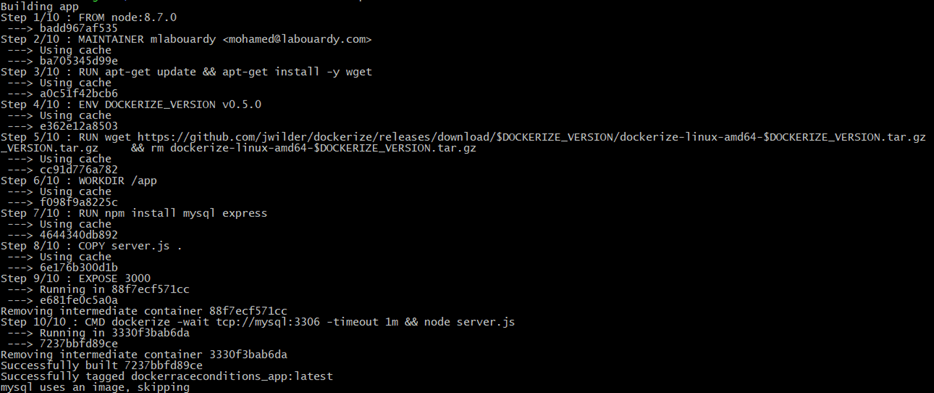

Let’s build the image:

```bash
docker-compose build
```

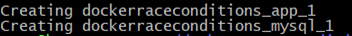

Then, create and start the containers in detached mode:

```bash
docker-compose up -d
```

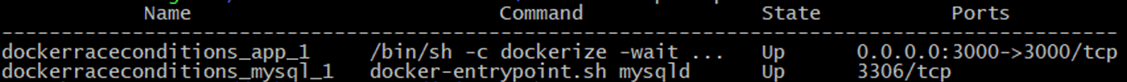

Let’s see the status:

```bash
docker-compose ps
```

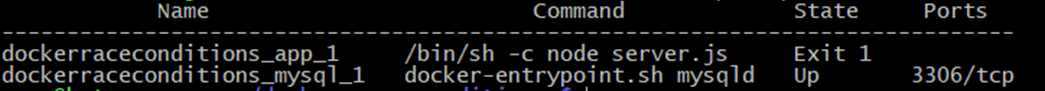

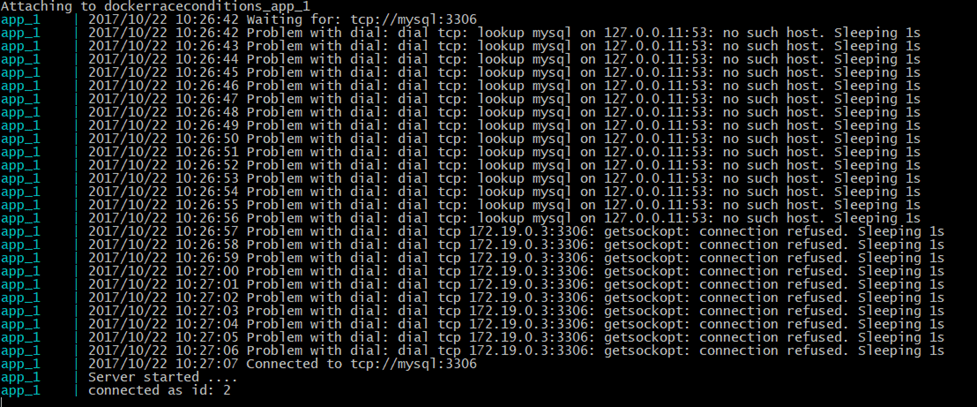

The application failed to start. Let’s see why:

```bash
docker-compose logs -f app
```

RACE CONDITION! The application container came up before the database was ready, tried to connect to MySQL, and failed with a connection error. There are several ways to avoid this:

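- Add a mechanism in the application code that waits for the database to be up and initialized before connecting to it

- Use a restart policy (see the Docker docs), so that Docker restarts the application container after it exits on a failed connection

- Hold the application container until the database is up and running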
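The first option, retrying from the application itself, could look roughly like the sketch below. `backoffDelay` and `connectWithRetry` are hypothetical helpers, the retry count and delays are arbitrary illustrative values, and `connect` is any function you supply that returns a promise which rejects while the database is unreachable.

```javascript
// Sketch of option 1: retry the database connection with capped exponential backoff.
// Hypothetical helpers; retry count and delays are illustrative defaults.

// Delay before retry number `attempt` (0-based): baseMs * 2^attempt, capped at maxMs.
function backoffDelay(attempt, baseMs, maxMs) {
  return Math.min(baseMs * 2 ** attempt, maxMs);
}

// Calls `connect()` until it resolves; after `retries` extra attempts,
// rethrows the last error.
async function connectWithRetry(connect, { retries = 10, baseMs = 500, maxMs = 5000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await connect();
    } catch (err) {
      if (attempt >= retries) throw err;
      const delay = backoffDelay(attempt, baseMs, maxMs);
      console.warn(`database not ready (${err.code || err.message}), retrying in ${delay} ms`);
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
}

module.exports = { backoffDelay, connectWithRetry };
```

With the `mysql` package, `connect` would create a fresh connection with `mysql.createConnection(...)` on every attempt and wrap its `connect(callback)` in a promise, since a connection object cannot be reused after a fatal error. For the restart-policy option, the equivalent requirement is that the app actually exits when it cannot connect; a non-zero exit code is what the `on-failure` policy reacts to.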

I will go with the third solution, using an open source tool called Dockerize. Its advantage is that it is fast: it simply loops over opening the database socket until the connection succeeds, and only then launches the web app.

Note: Dockerize can wait for services over several protocols: file, tcp, tcp4, tcp6, http, https and unix.
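Dockerize’s `-wait` flag boils down to polling a target until it accepts connections; for example, `dockerize -wait tcp://db:3306 -timeout 60s npm start` would hold `npm start` until port 3306 on `db` opens (the service name `db`, the port and the start command are assumptions about this stack). The sketch below is a hypothetical re-implementation of only the `tcp://` case in Node, not Dockerize’s actual code; `parseTcpTarget`, `tryConnect` and `waitForTcp` are illustrative names and the timeouts are arbitrary.

```javascript
// Sketch: wait until a tcp://host:port target accepts connections, similar in
// spirit to `dockerize -wait tcp://...`. Only the tcp:// scheme is handled.
const net = require('net');

// Parse "tcp://db:3306" into { host: 'db', port: 3306 }.
function parseTcpTarget(target) {
  const url = new URL(target);
  if (url.protocol !== 'tcp:') {
    throw new Error(`unsupported scheme ${url.protocol} (this sketch only handles tcp://)`);
  }
  const port = Number(url.port);
  if (!url.hostname || !port) {
    throw new Error(`expected tcp://host:port, got ${target}`);
  }
  return { host: url.hostname, port };
}

// One attempt: resolves true if the socket opens, false on an error or if
// nothing happens within `attemptTimeoutMs` (e.g. packets silently dropped).
function tryConnect(host, port, attemptTimeoutMs) {
  return new Promise((resolve) => {
    const socket = net.connect(port, host);
    const finish = (ok) => {
      socket.destroy();
      resolve(ok);
    };
    socket.setTimeout(attemptTimeoutMs);
    socket.once('connect', () => finish(true));
    socket.once('error', () => finish(false));
    socket.once('timeout', () => finish(false));
  });
}

// Poll `target` every `intervalMs` until it accepts a connection,
// or reject once `timeoutMs` has elapsed.
async function waitForTcp(target, { timeoutMs = 60000, intervalMs = 1000 } = {}) {
  const { host, port } = parseTcpTarget(target);
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    if (await tryConnect(host, port, intervalMs)) return;
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`timed out after ${timeoutMs} ms waiting for ${target}`);
}
```

Doing the wait outside the application, as Dockerize does, keeps the app code unchanged; the trade-off is that an open port only proves MySQL is accepting TCP connections, not that its schema or users are fully set up.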

So just update the Dockerfile to install Dockerize:

```dockerfile
FROM node:8.7.0
```

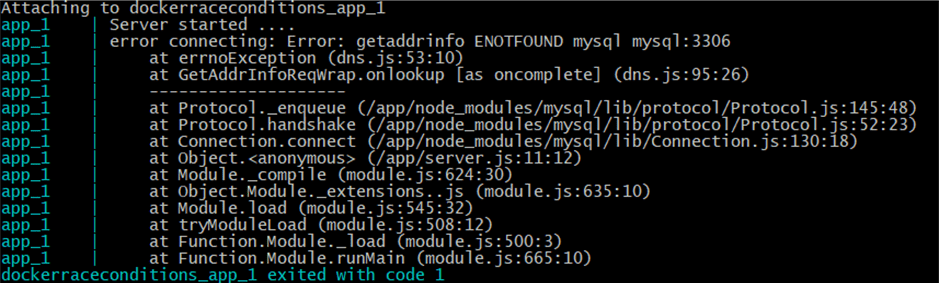

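Then, rebuild the image and recreate the containers (`--build` makes Compose rebuild the image instead of reusing the one built earlier):

```bash
docker-compose up -d --build
```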

And check the application logs again:

```bash
docker-compose logs -f app
```

It’s working!

Drop your comments, feedback, or suggestions below, or connect with me directly on Twitter @mlabouardy.