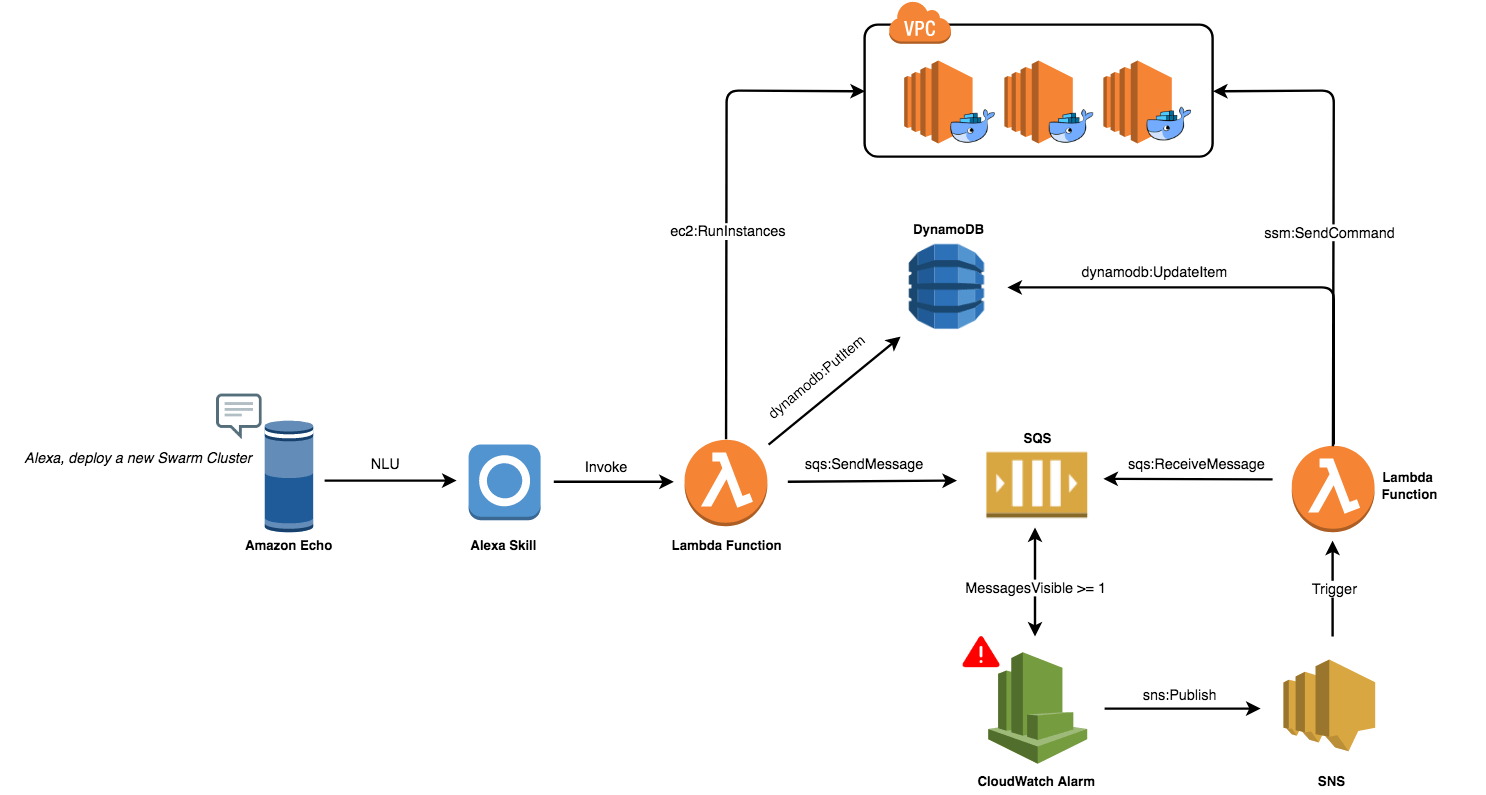

Serverless and containers have changed the way we use public clouds and how we write, deploy, and maintain applications. A great way to combine the two paradigms is to build an Alexa voice assistant, backed by Lambda functions written in Go, that deploys a Docker Swarm cluster on AWS.

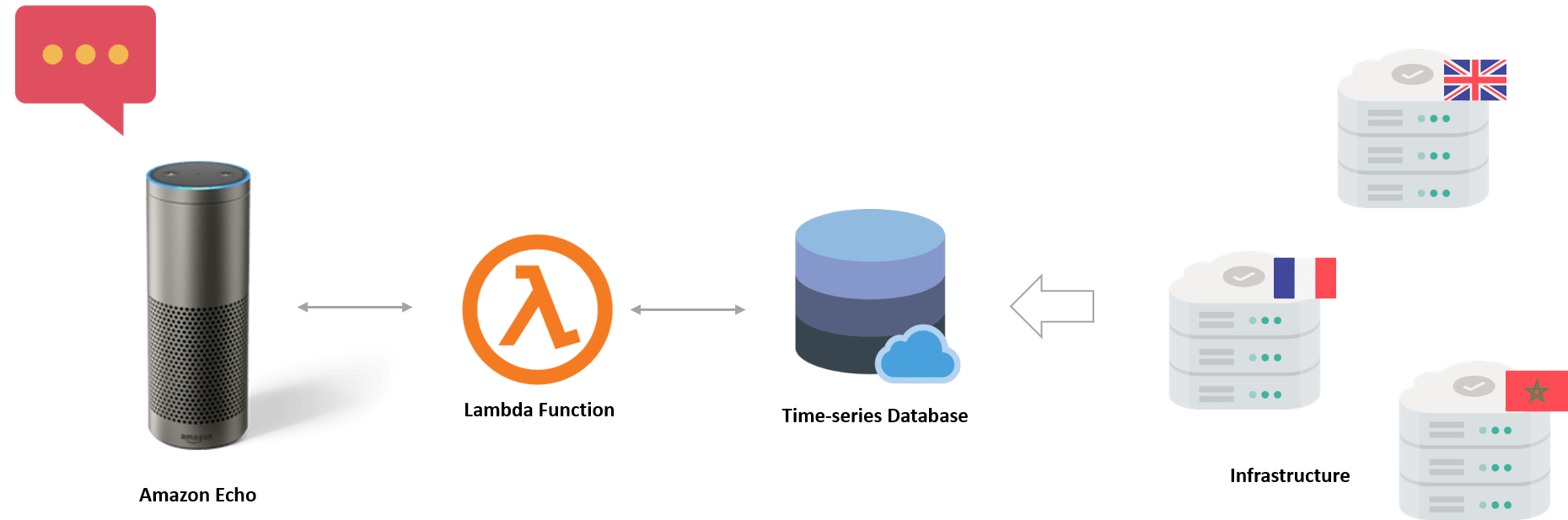

The figure below shows all components needed to deploy a production-ready Swarm cluster on AWS with Alexa.

Note: Full code is available on my GitHub.

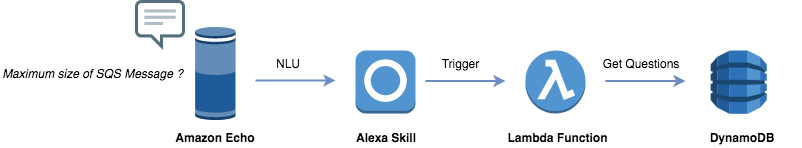

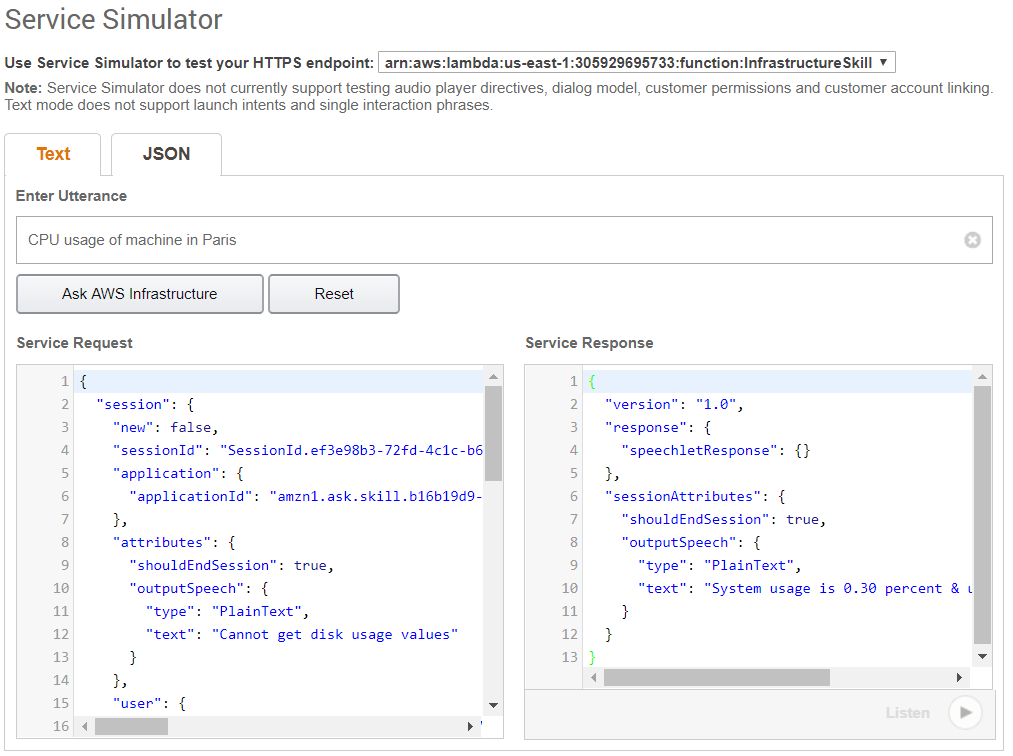

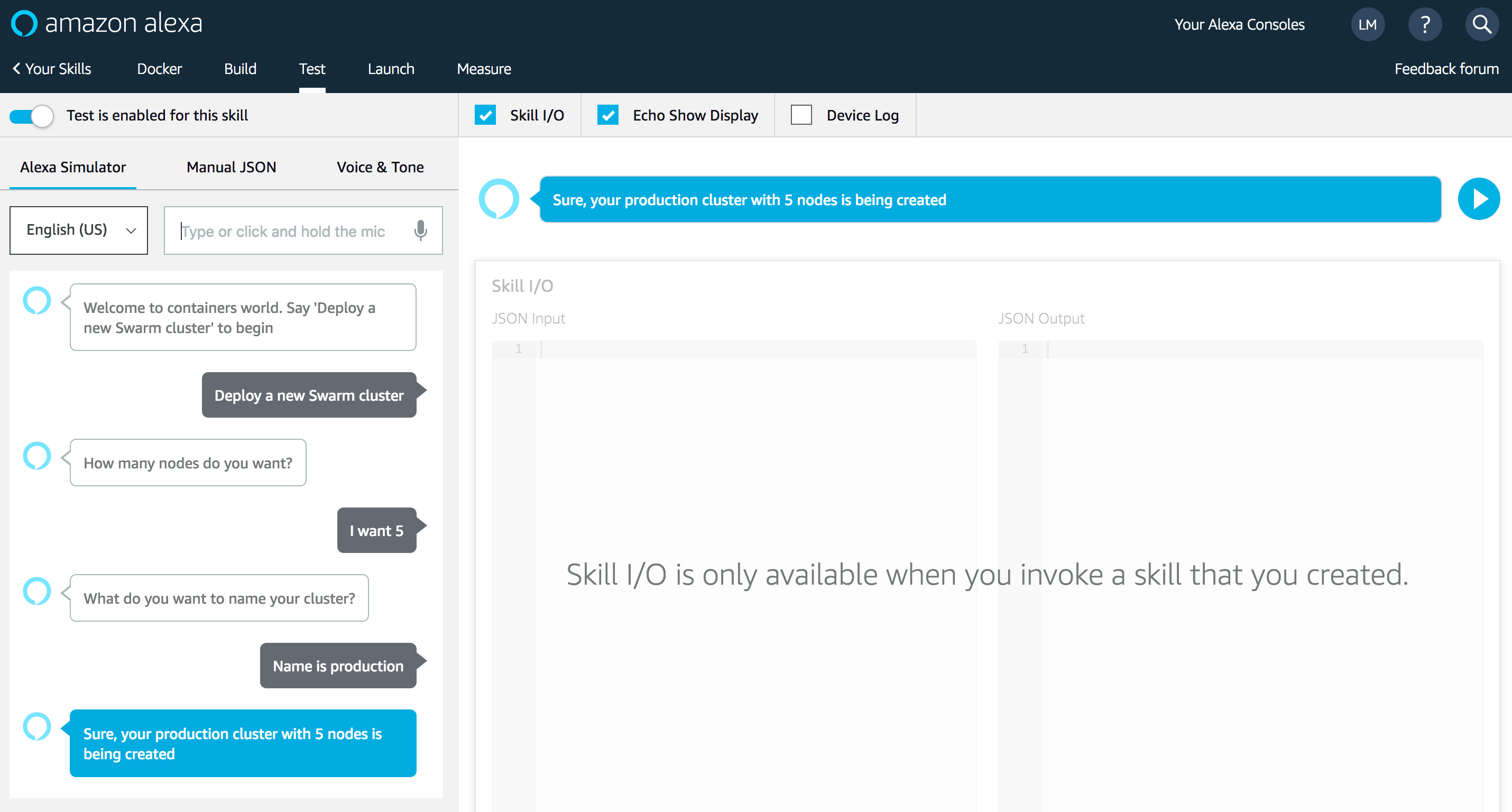

A user will ask Amazon Echo to deploy a Swarm Cluster:

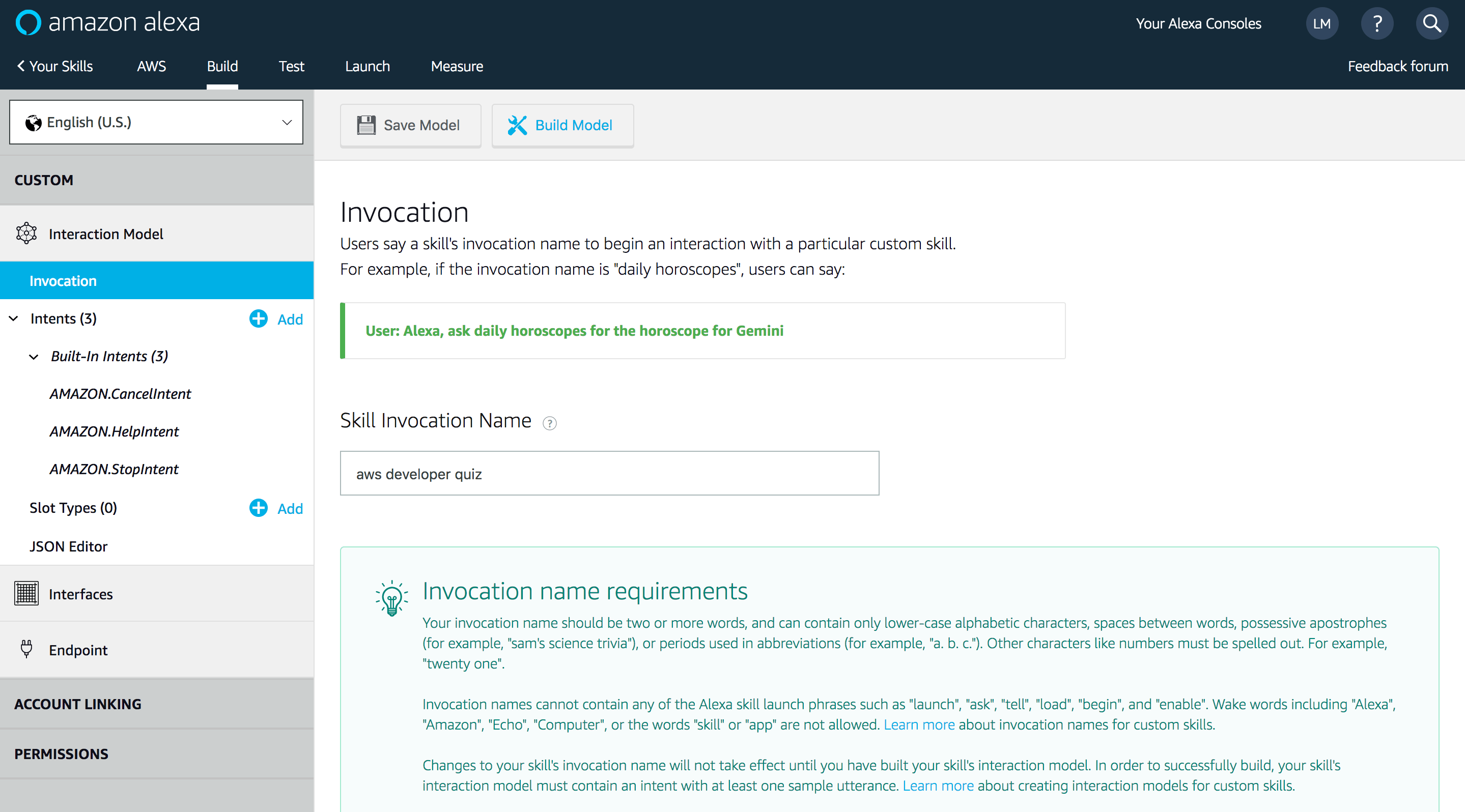

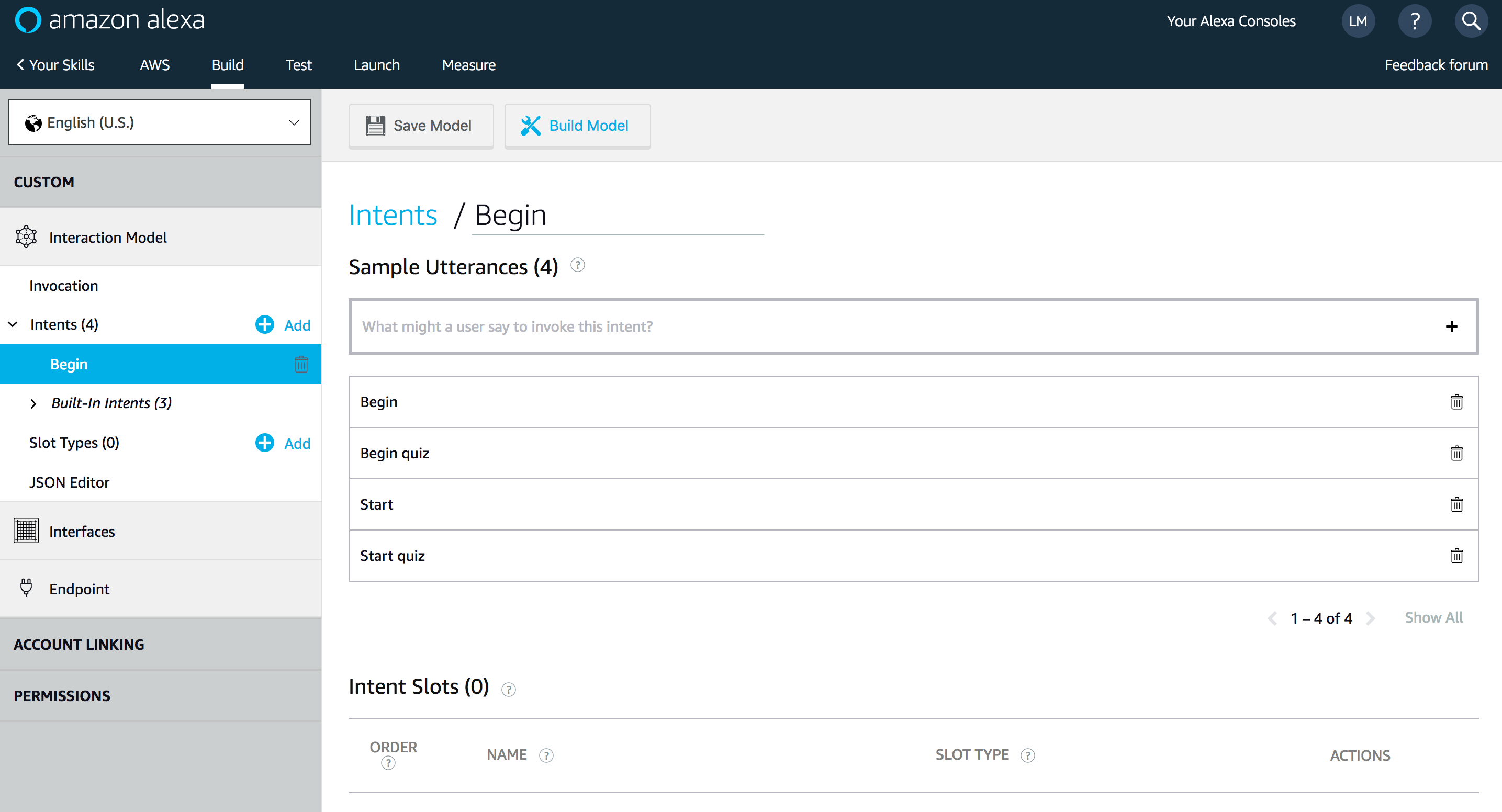

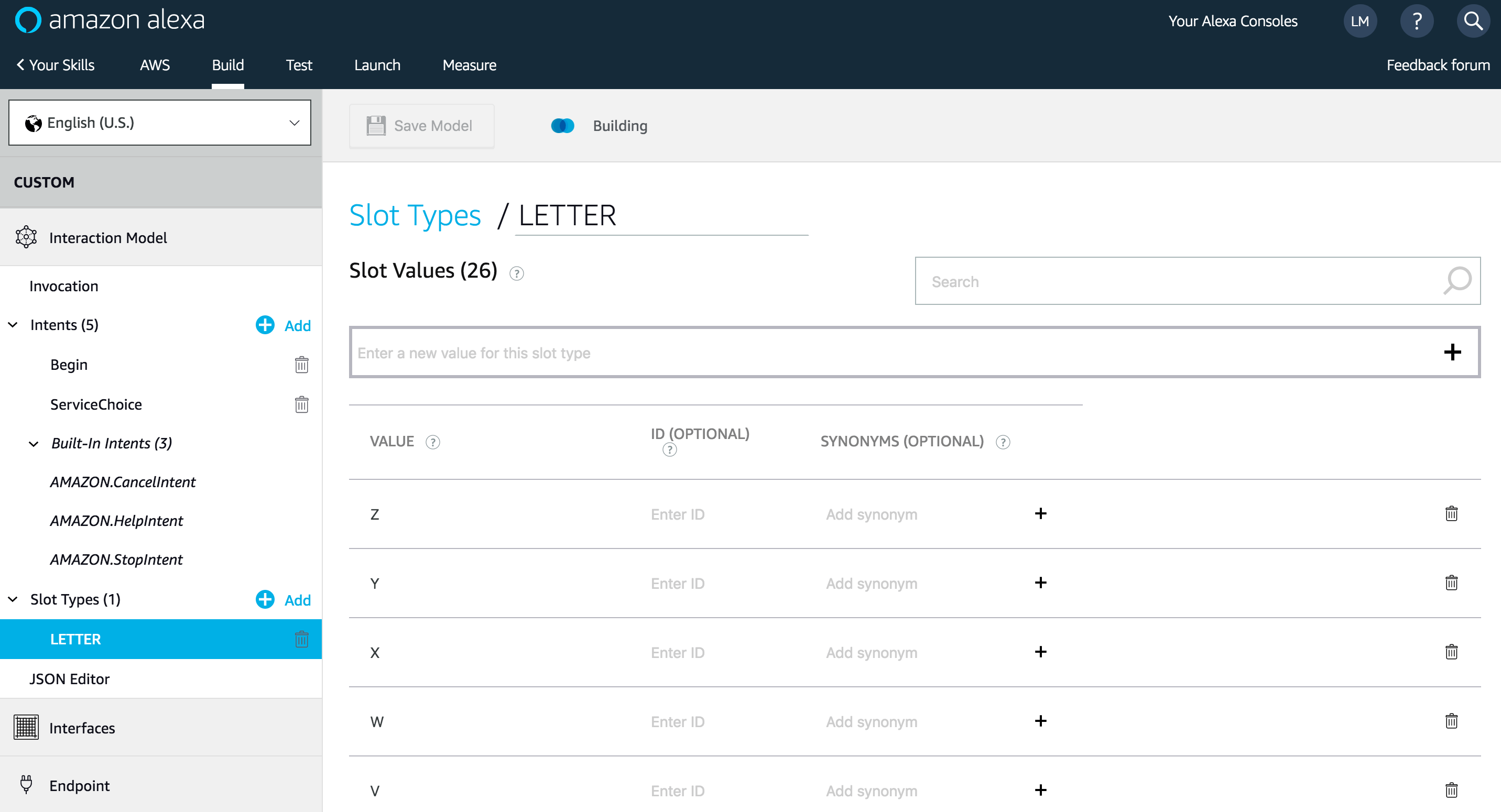

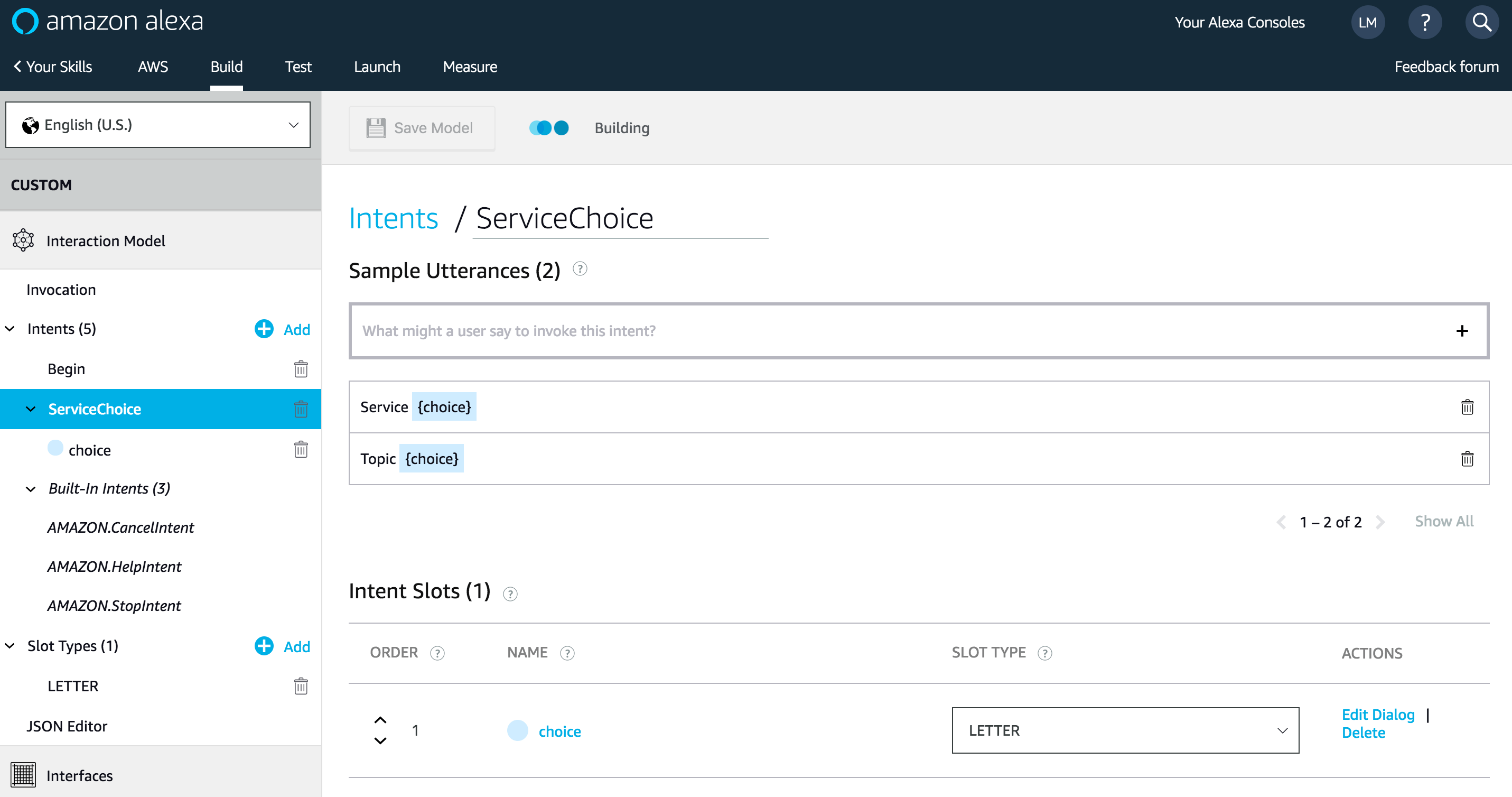

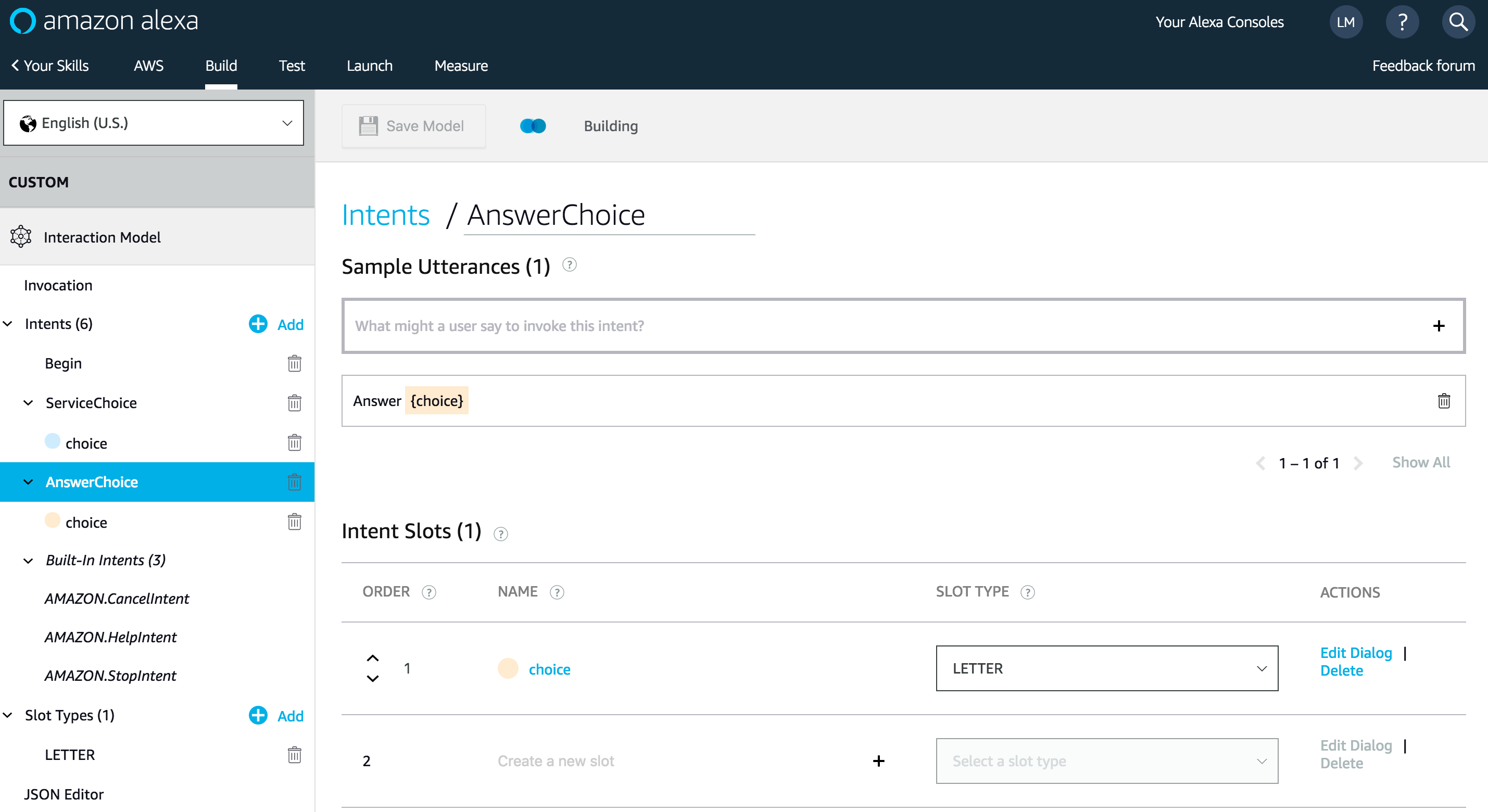

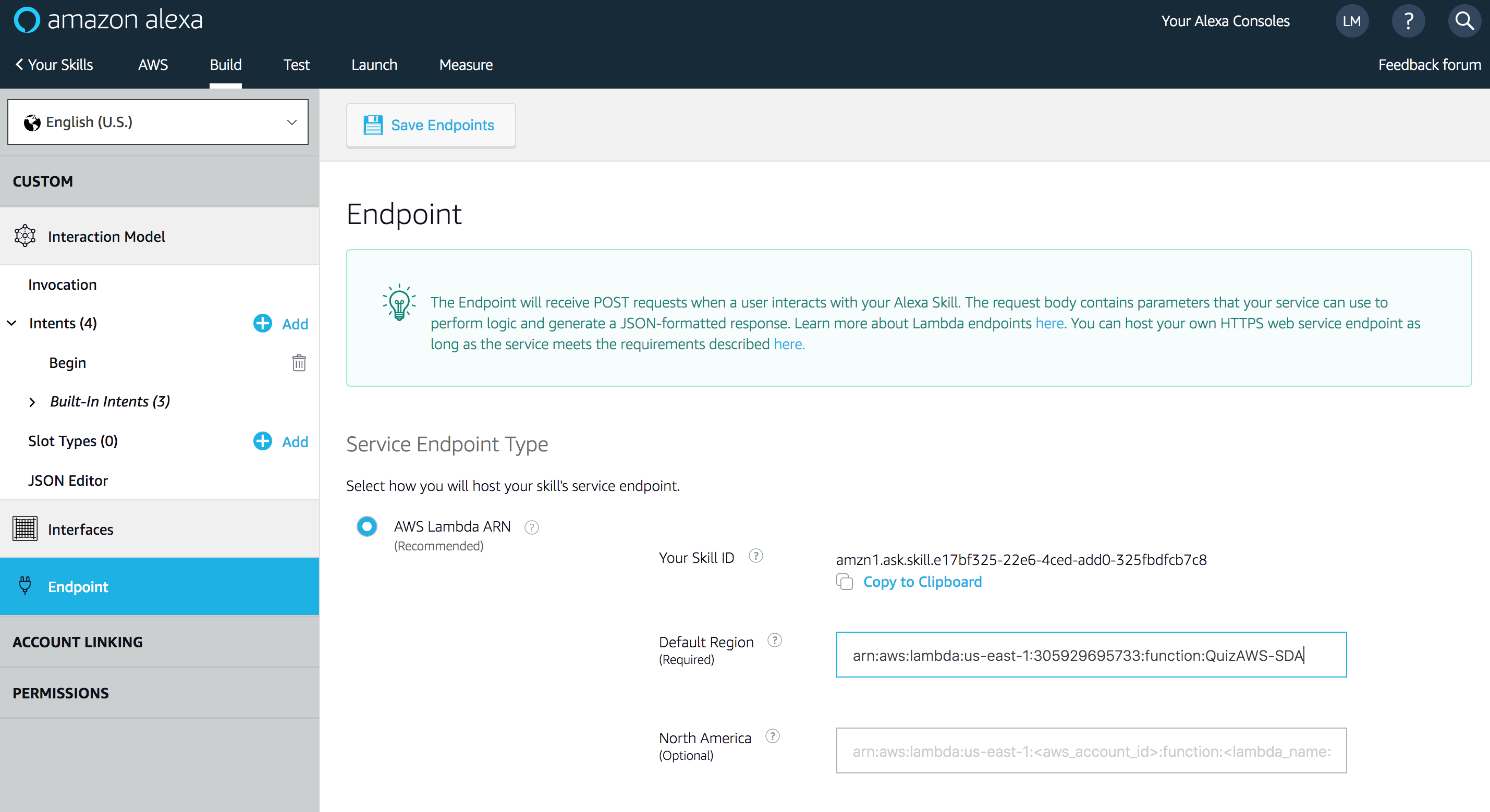

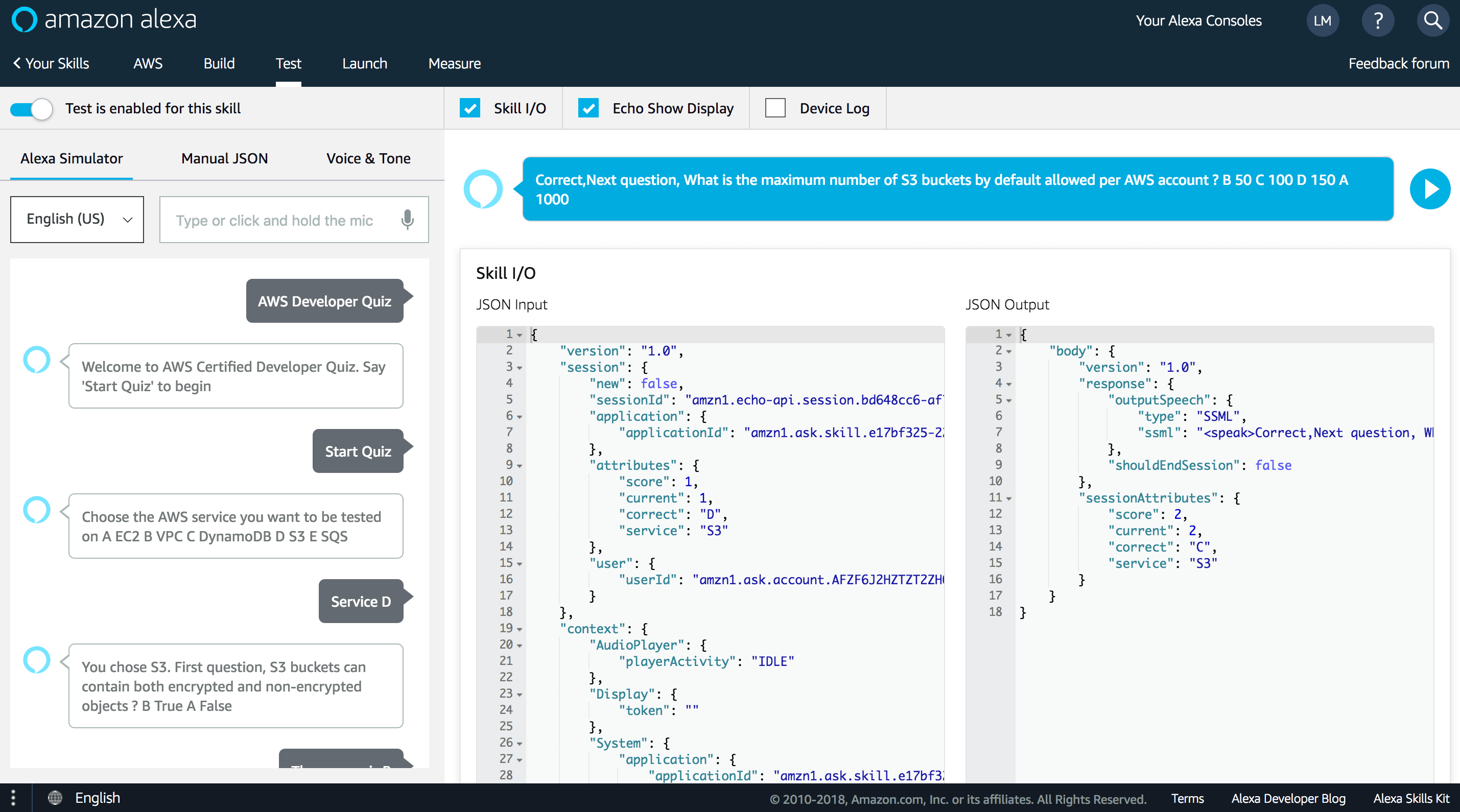

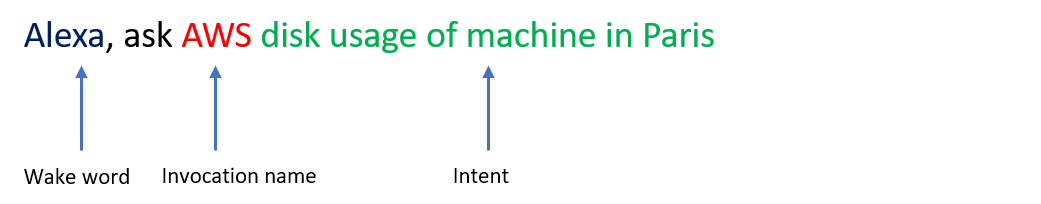

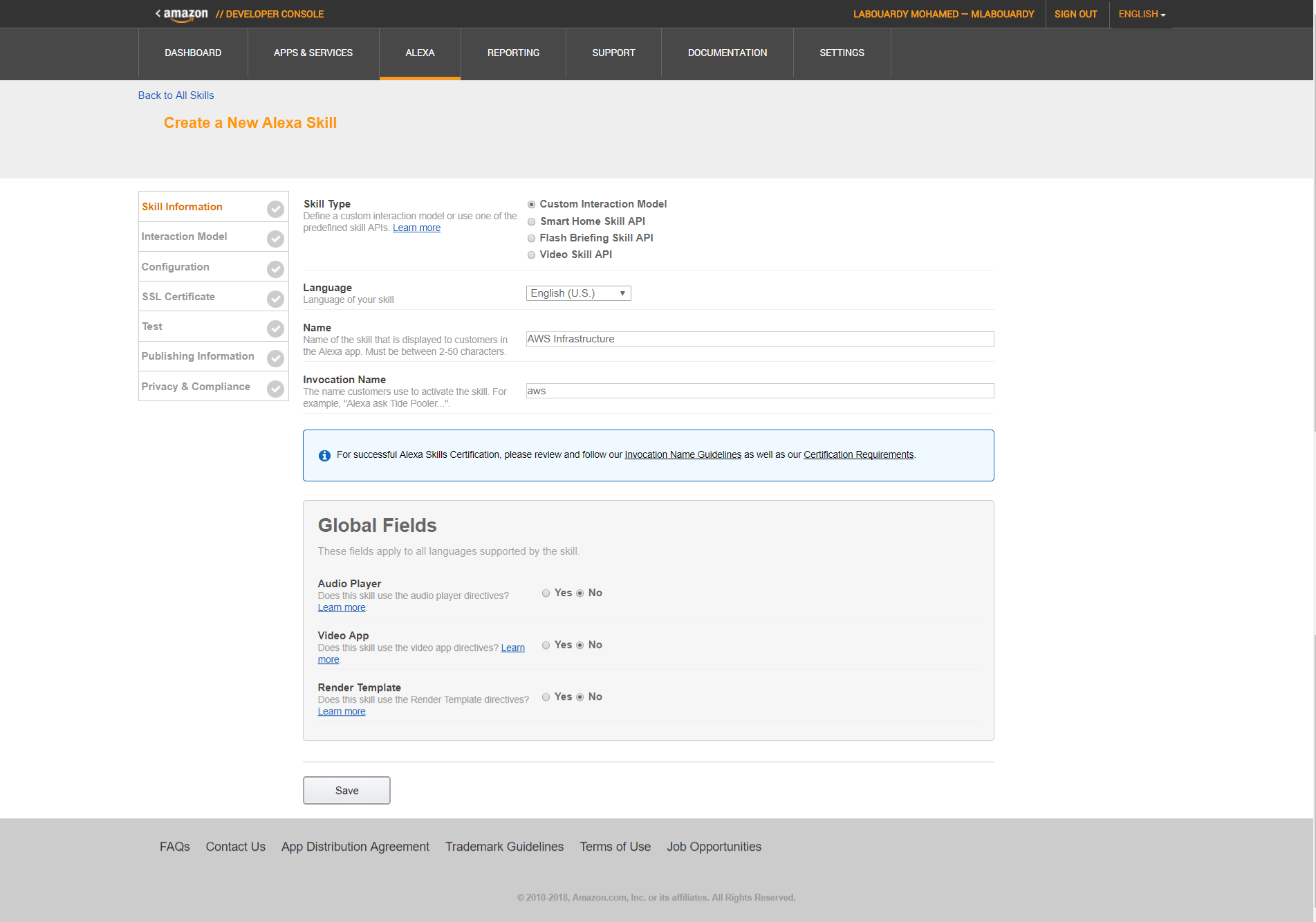

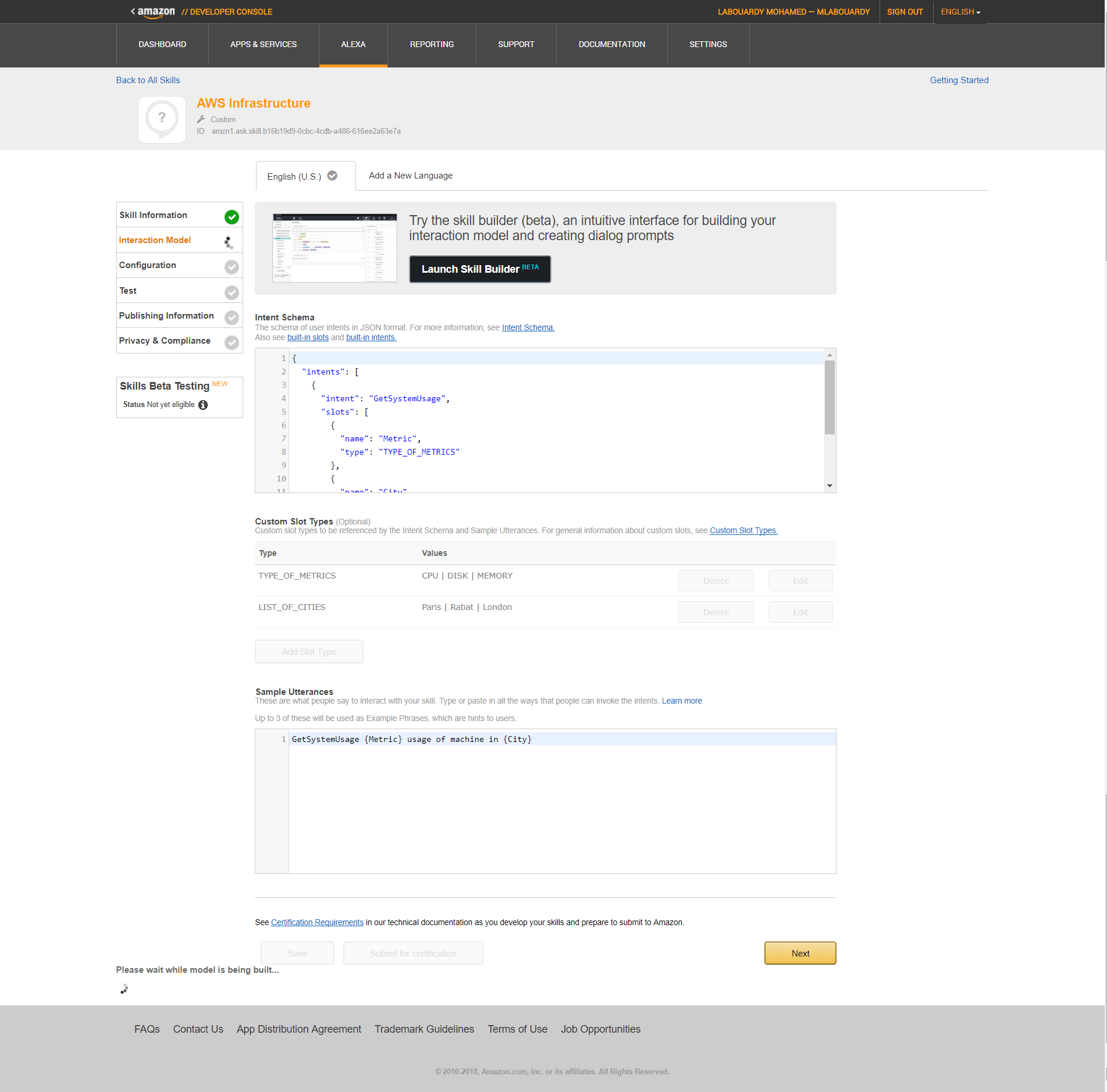

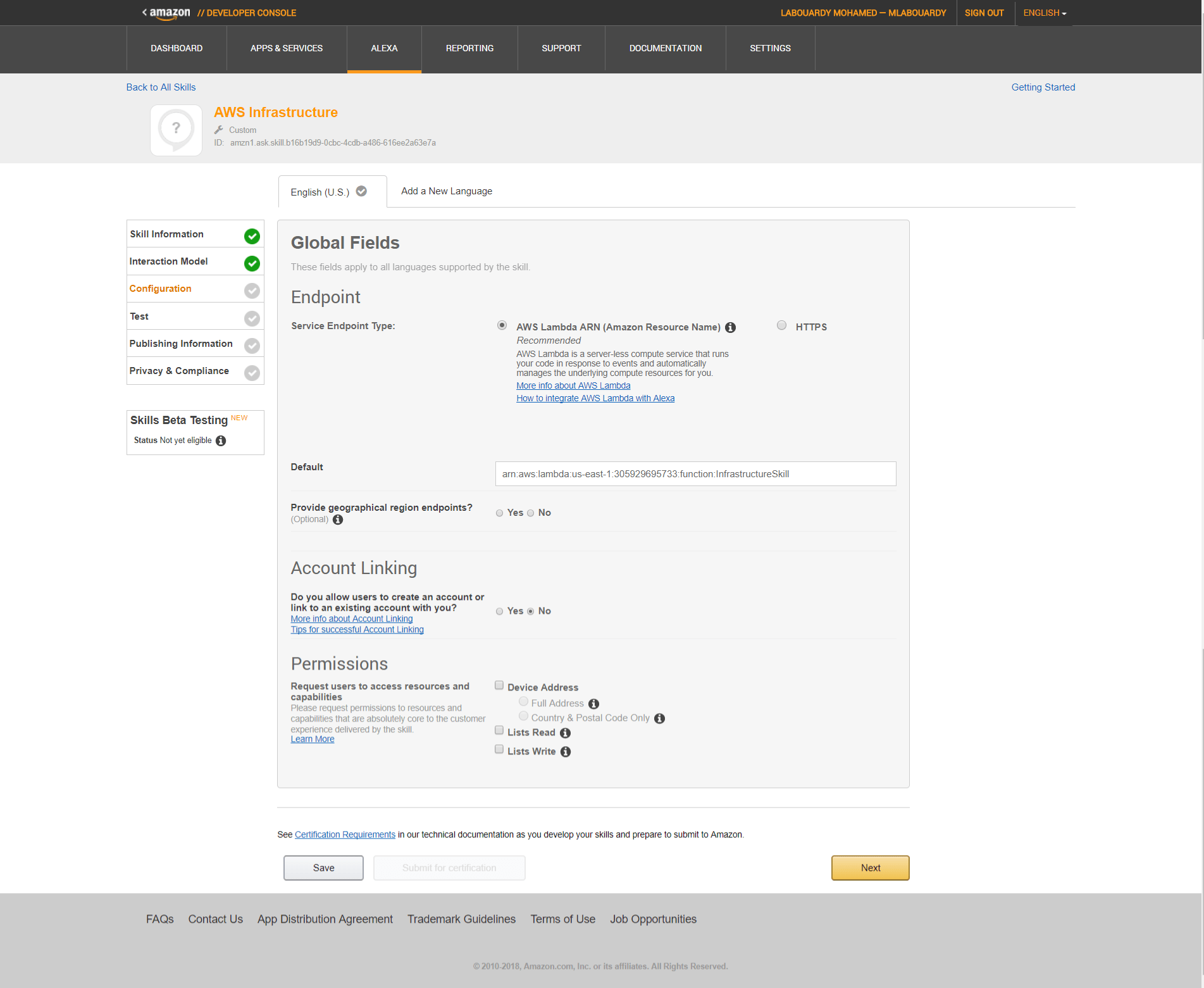

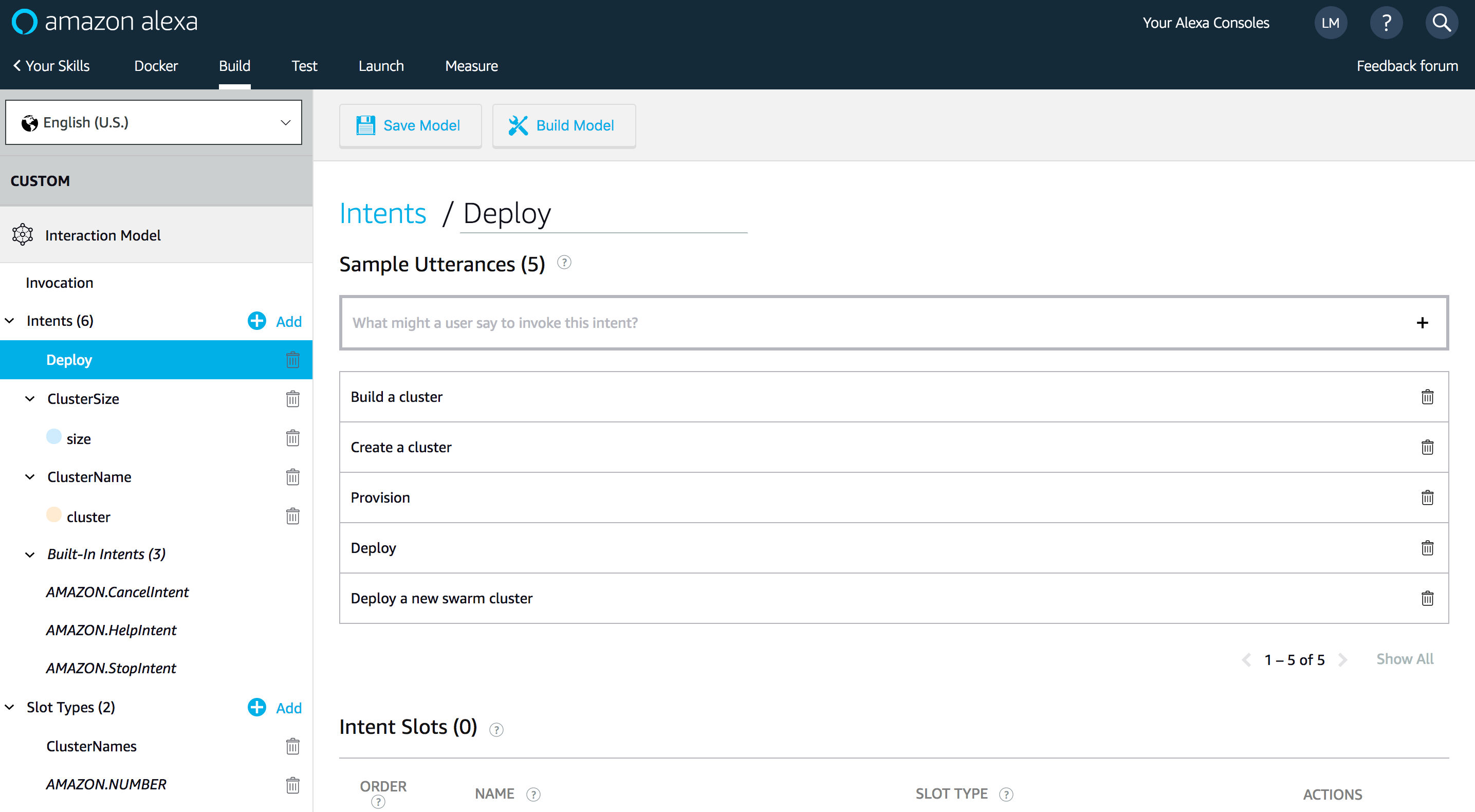

Echo will intercept the user’s voice command with built-in natural language understanding and speech recognition, then convey it to the Alexa service. A custom Alexa skill will convert the voice command to intents:

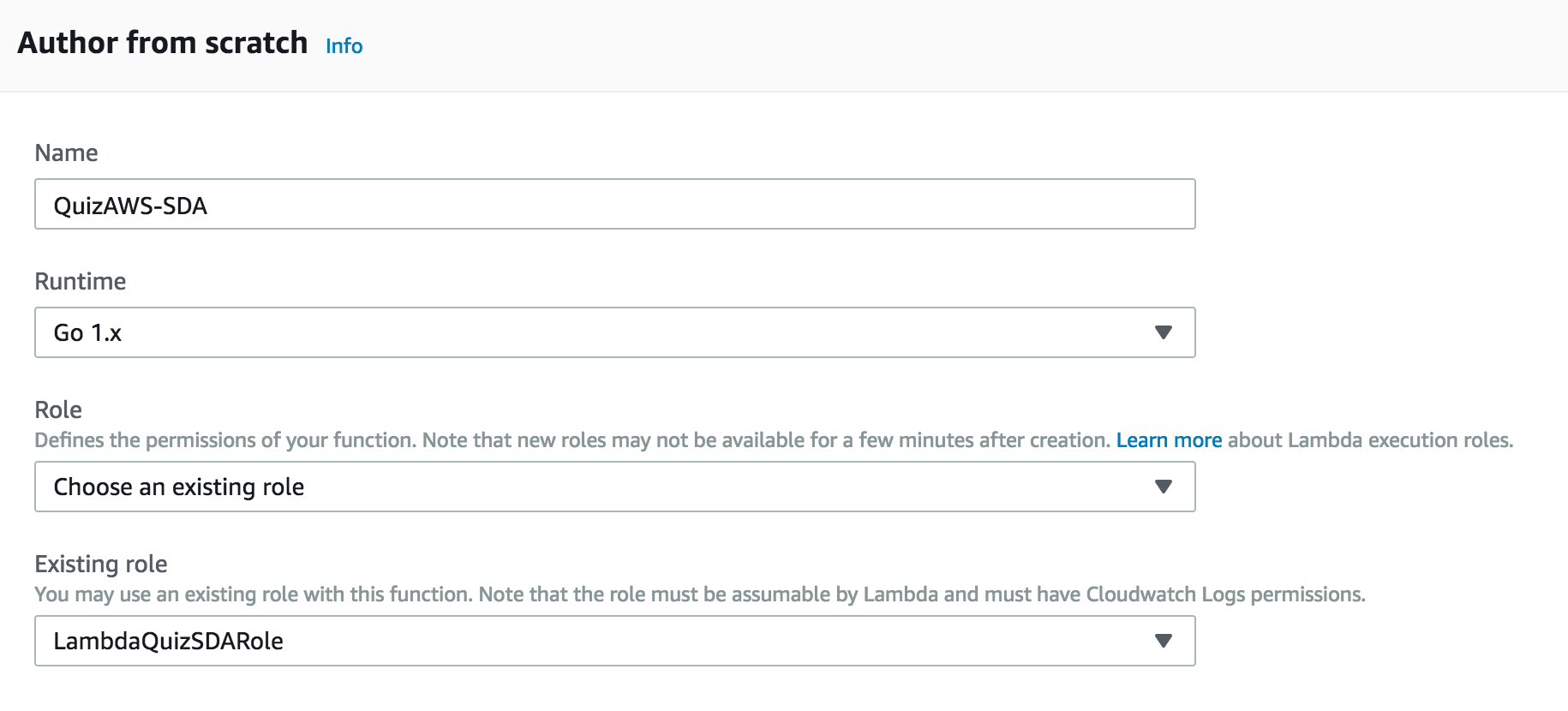

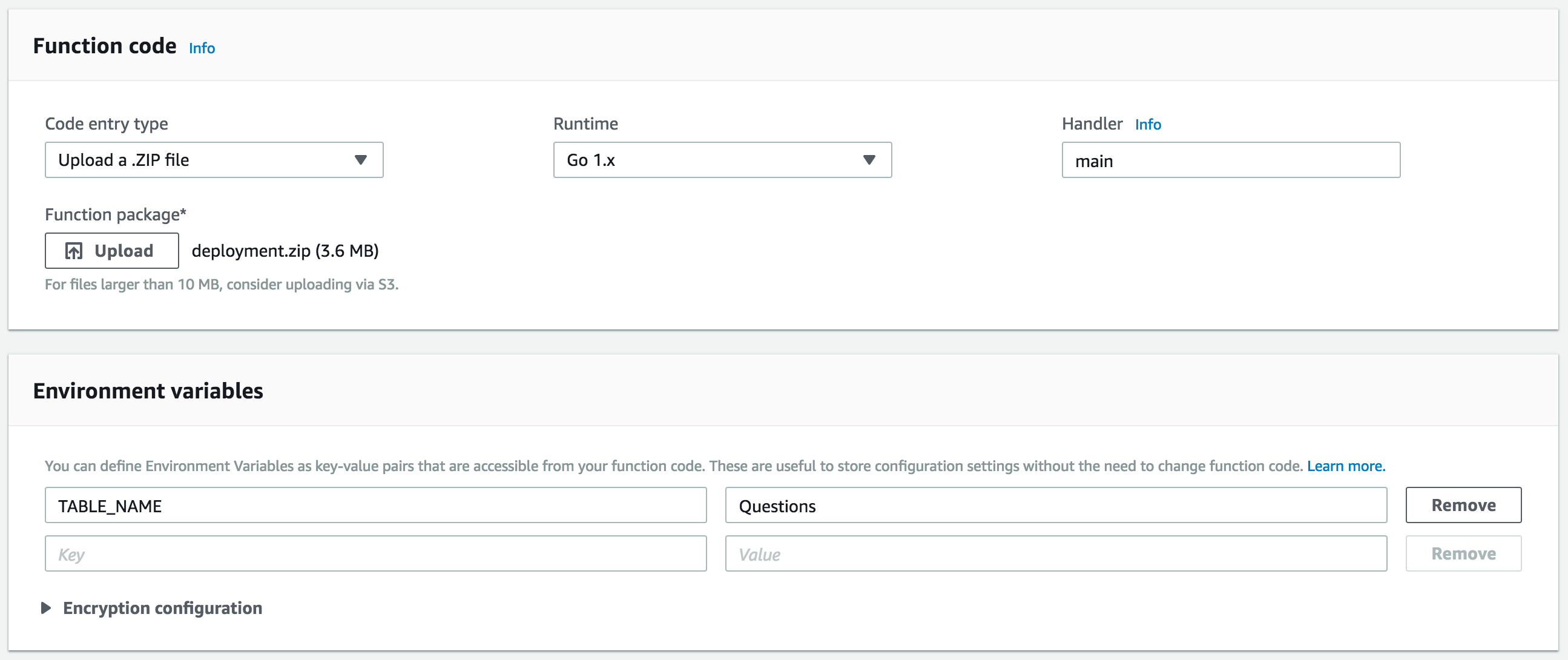

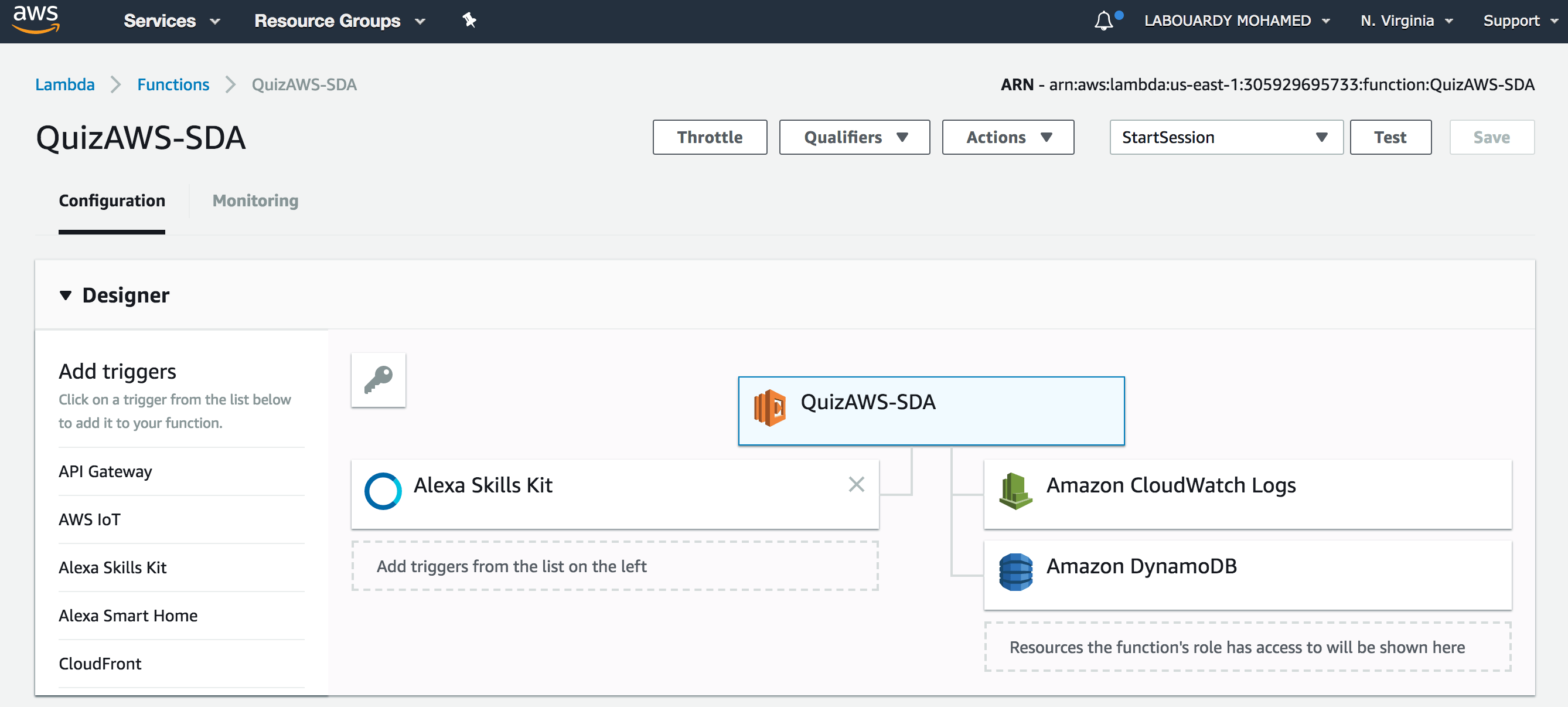

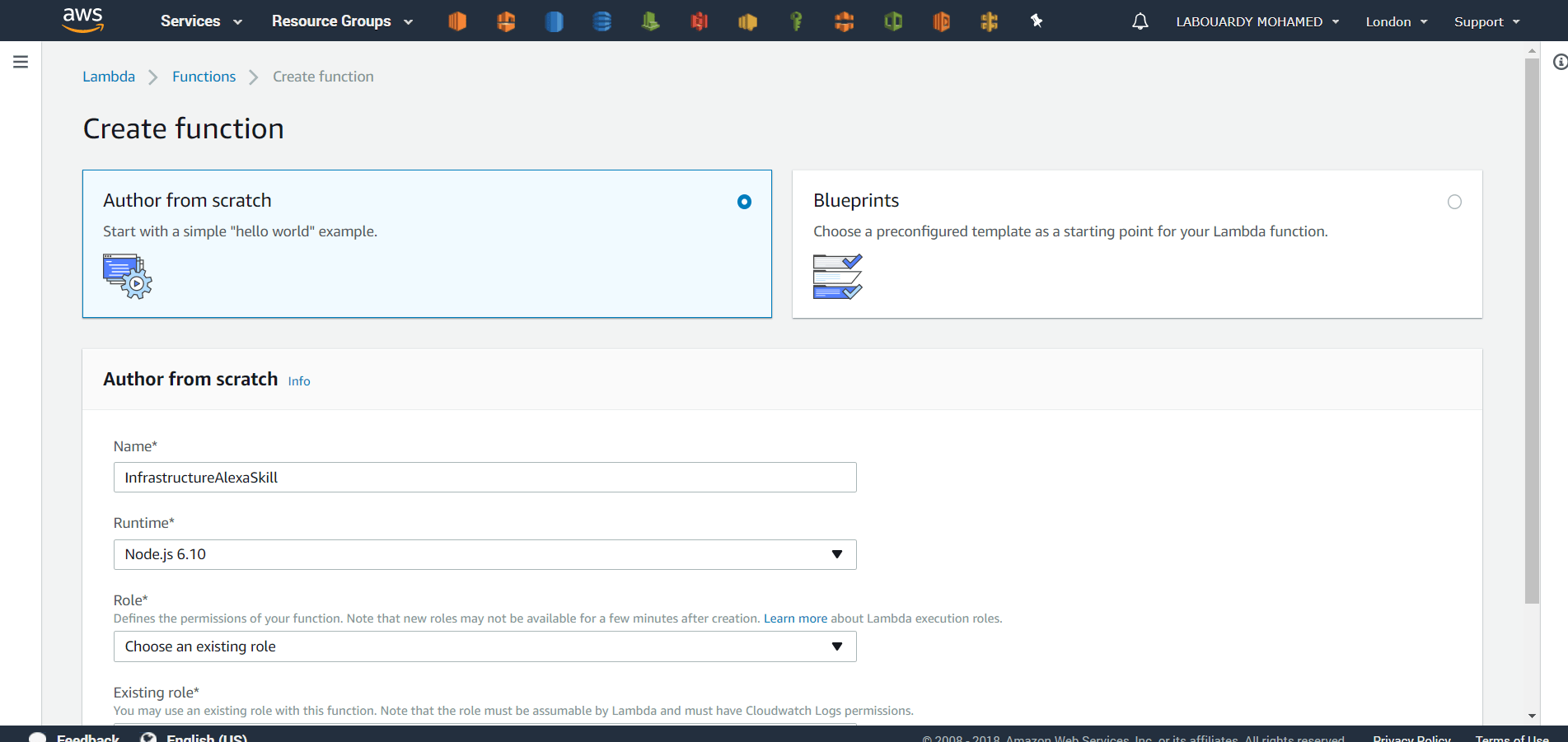

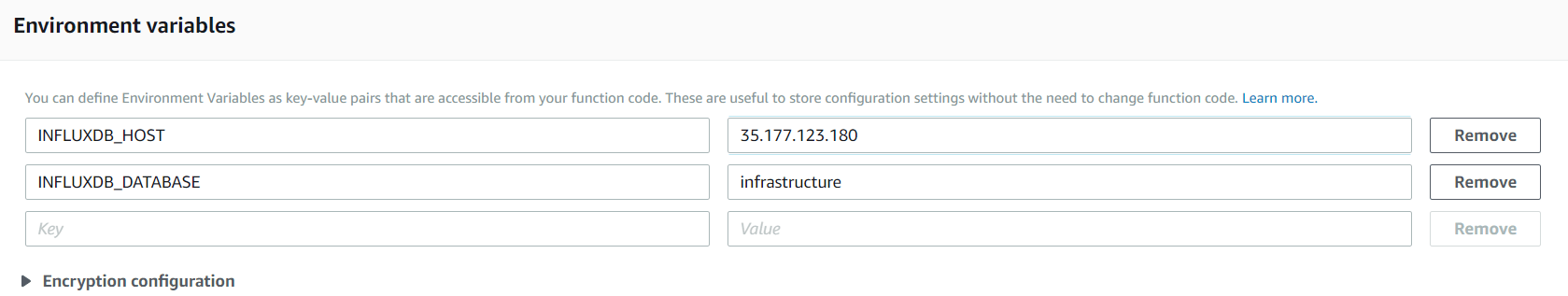

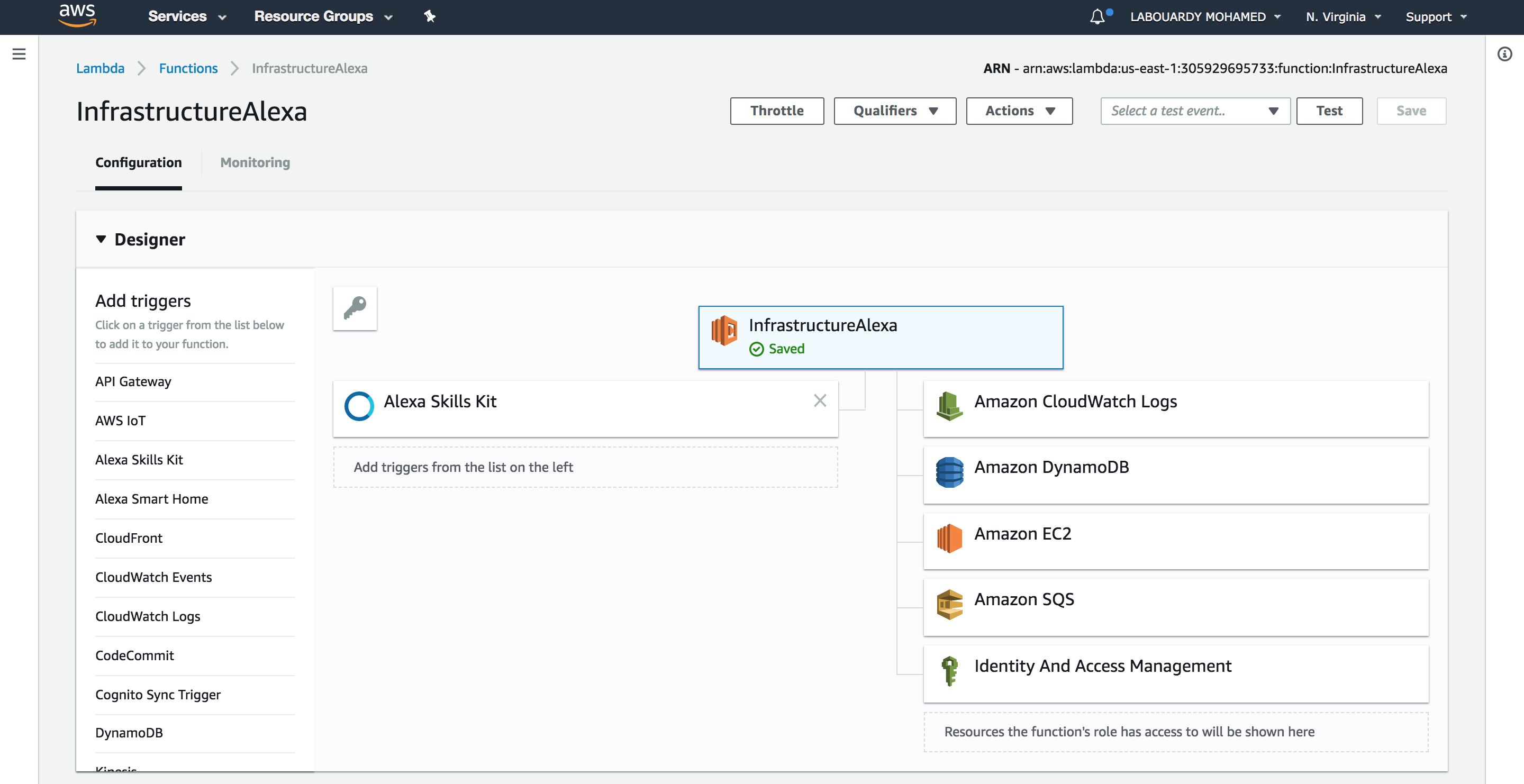

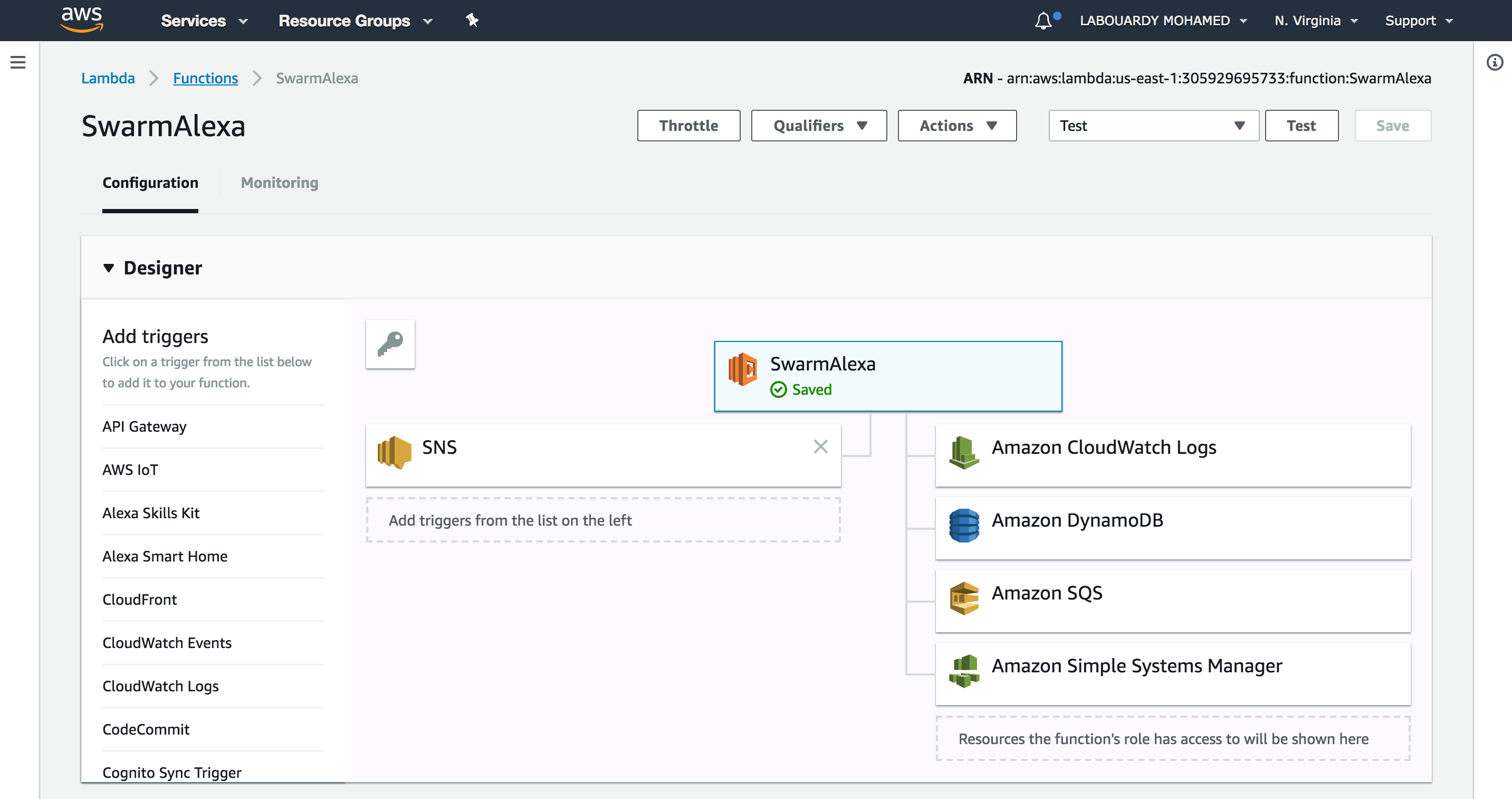
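To make the intent step concrete, here is a minimal sketch of how a Go function could read the intent name and slot values out of the JSON request that the Alexa service sends. It is not the code from the repository: the struct covers only a small part of the request envelope, and the intent name `DeployCluster` and the slot `count` are hypothetical names chosen for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// AlexaRequest models only the parts of the Alexa request envelope used here.
type AlexaRequest struct {
	Version string `json:"version"`
	Request struct {
		Type   string `json:"type"`
		Intent struct {
			Name  string `json:"name"`
			Slots map[string]struct {
				Name  string `json:"name"`
				Value string `json:"value"`
			} `json:"slots"`
		} `json:"intent"`
	} `json:"request"`
}

// ParseIntent extracts the intent name and slot values from a raw request body.
// A LaunchRequest carries no intent, so it yields an empty name and no slots.
func ParseIntent(body []byte) (string, map[string]string, error) {
	var req AlexaRequest
	if err := json.Unmarshal(body, &req); err != nil {
		return "", nil, fmt.Errorf("decode alexa request: %w", err)
	}
	slots := make(map[string]string, len(req.Request.Intent.Slots))
	for key, slot := range req.Request.Intent.Slots {
		slots[key] = slot.Value
	}
	return req.Request.Intent.Name, slots, nil
}

func main() {
	// "DeployCluster" and "count" are hypothetical names for illustration.
	body := []byte(`{"version":"1.0","request":{"type":"IntentRequest","intent":{"name":"DeployCluster","slots":{"count":{"name":"count","value":"3"}}}}}`)
	name, slots, err := ParseIntent(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(name, slots["count"])
}
```

Keeping the parsing in a plain function that takes bytes makes it easy to test without an Echo device or the Lambda runtime.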

The Alexa skill will trigger a Lambda function for intent fulfilment:

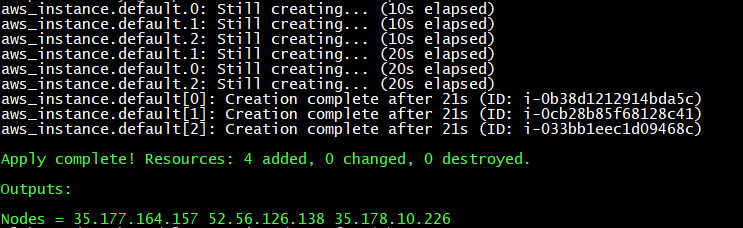

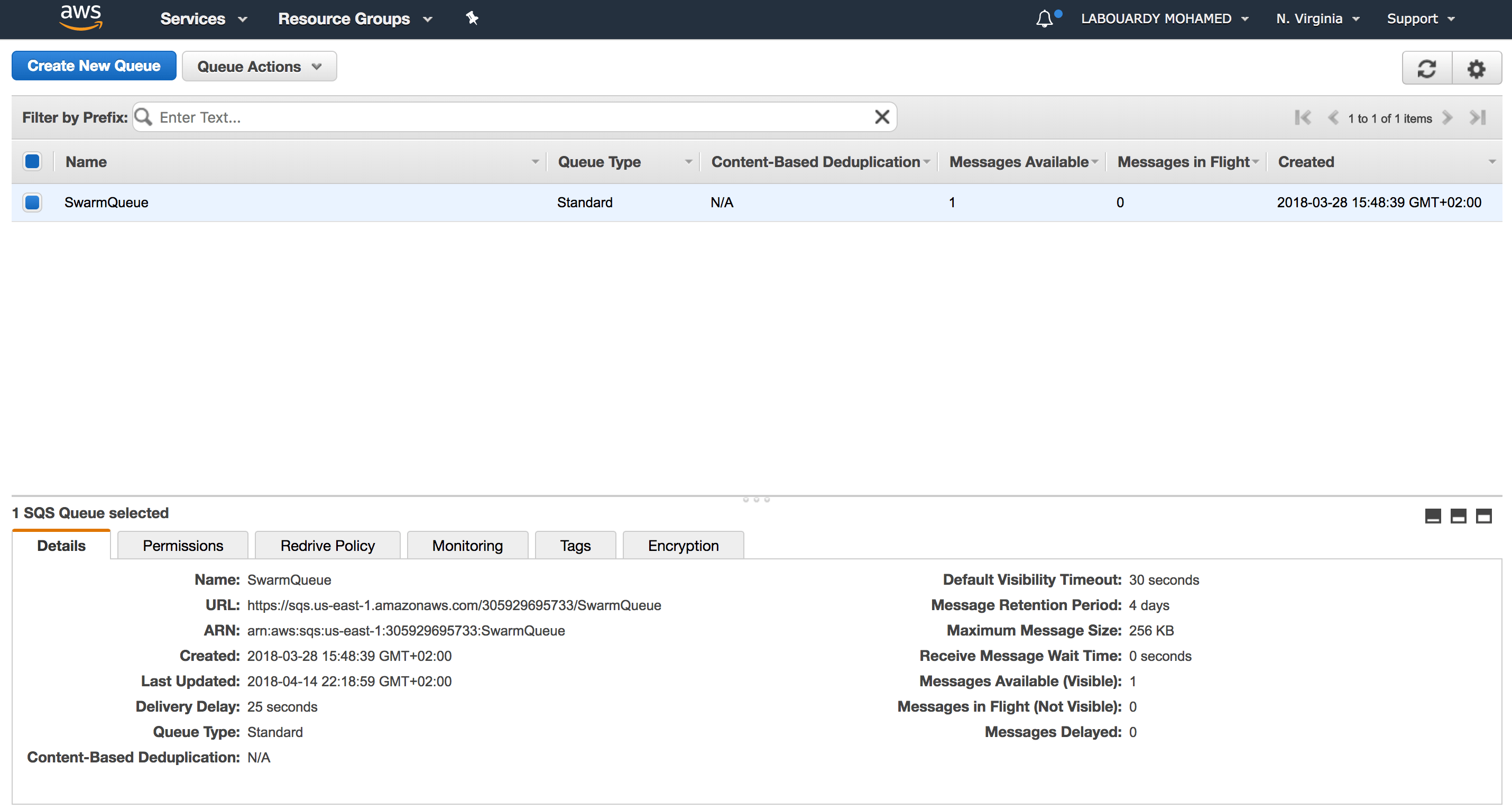

The Lambda function will use the AWS EC2 API to deploy a fleet of EC2 instances from an AMI with Docker CE preinstalled (I used Packer to bake the AMI, which reduces the startup time of the instances). Then it pushes the cluster IP addresses to an SQS queue:

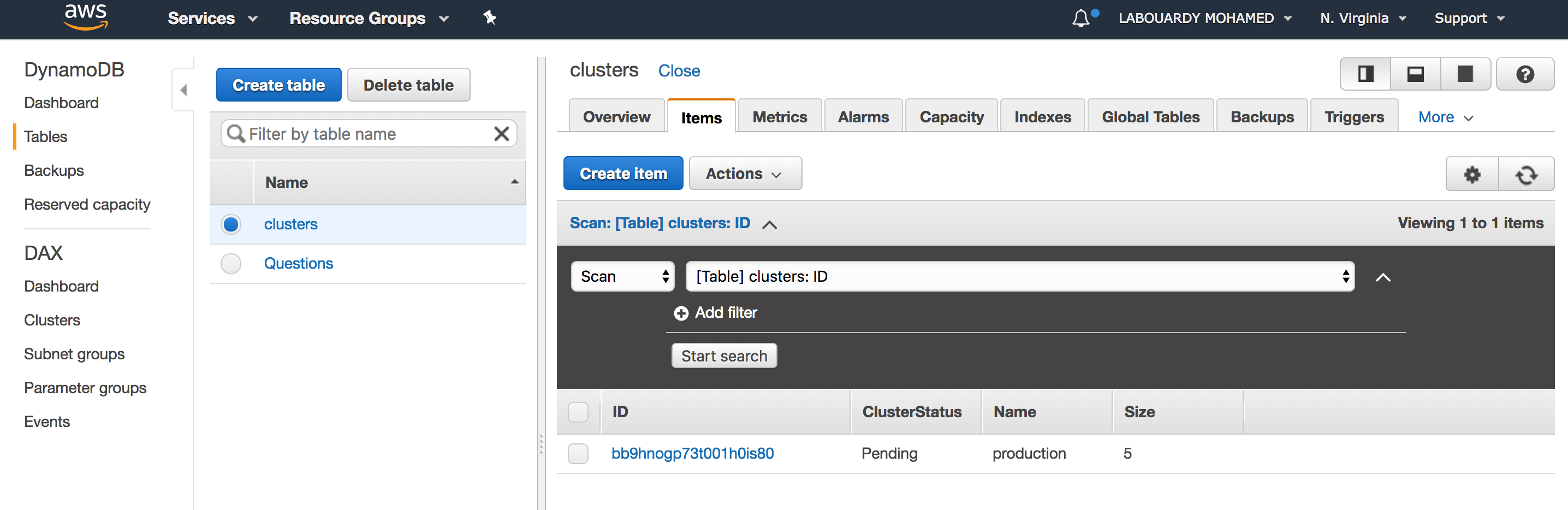
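The hand-off to the queue can be sketched as follows. This is an assumption-laden illustration, not the repository code: the `ClusterMessage` payload shape and its field names are invented here, and the `Queue` interface is a hypothetical abstraction over the SQS send call so the example runs without AWS credentials.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// ClusterMessage is a hypothetical payload describing the new fleet.
type ClusterMessage struct {
	ClusterID string   `json:"cluster_id"`
	Nodes     []string `json:"nodes"`
}

// Queue abstracts the single queue operation needed here. In the real
// function this would wrap the SQS client.
type Queue interface {
	Send(body string) error
}

// PublishCluster encodes the instance addresses and pushes them to the queue.
func PublishCluster(q Queue, id string, ips []string) error {
	if len(ips) == 0 {
		return errors.New("no instance addresses to publish")
	}
	body, err := json.Marshal(ClusterMessage{ClusterID: id, Nodes: ips})
	if err != nil {
		return fmt.Errorf("encode cluster message: %w", err)
	}
	return q.Send(string(body))
}

// memoryQueue is an in-memory stand-in for SQS, used only for the demo.
type memoryQueue struct{ messages []string }

func (m *memoryQueue) Send(body string) error {
	m.messages = append(m.messages, body)
	return nil
}

func main() {
	q := &memoryQueue{}
	if err := PublishCluster(q, "cluster-1", []string{"10.0.0.10", "10.0.0.11"}); err != nil {
		panic(err)
	}
	fmt.Println(q.messages[0])
}
```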

Next, the function will insert a new item into a DynamoDB table with the current state of the cluster:

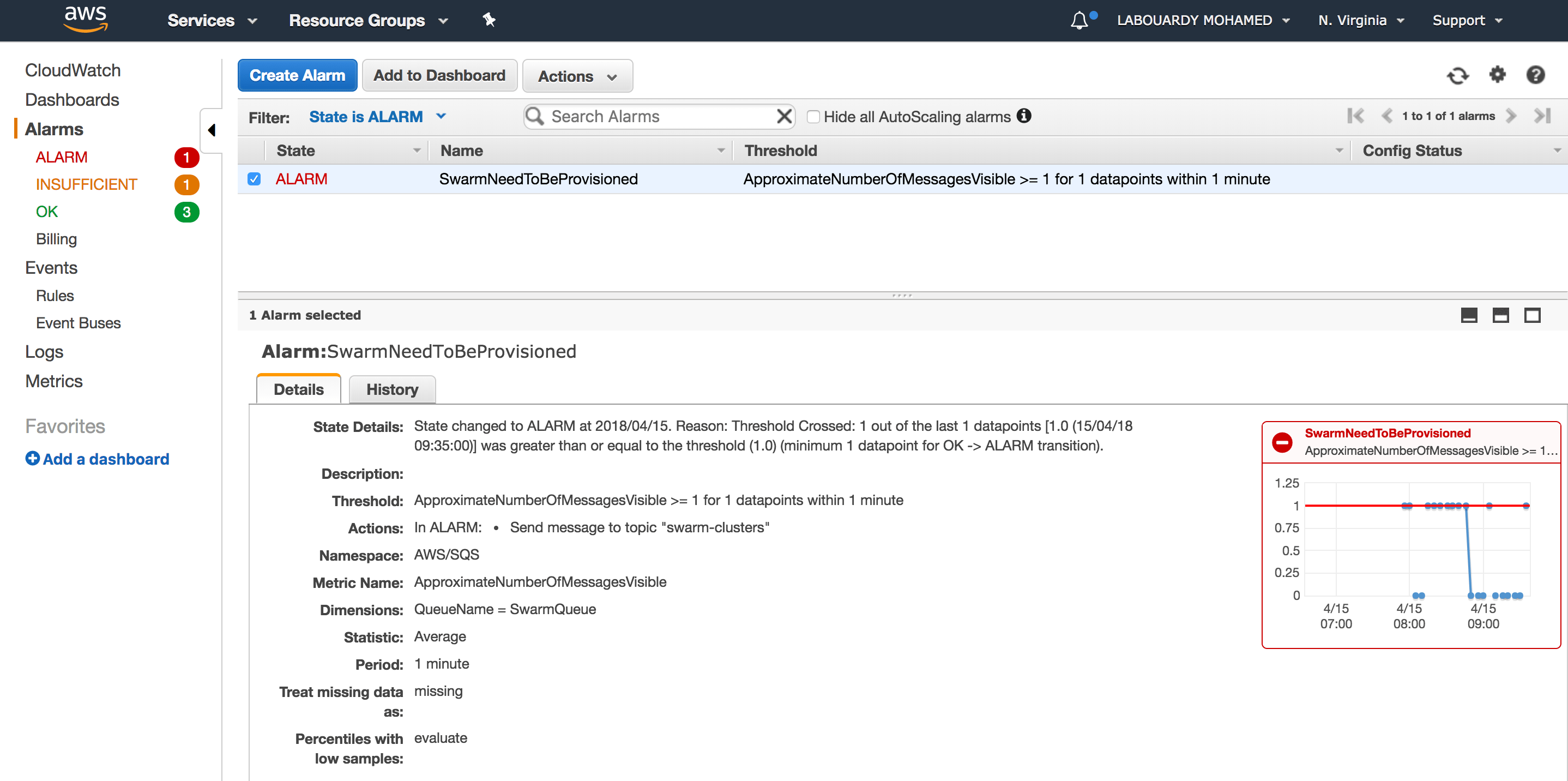

Once the SQS queue receives the message, a CloudWatch alarm (which monitors the ApproximateNumberOfMessagesVisible metric) is triggered and, as a result, publishes a message to an SNS topic:

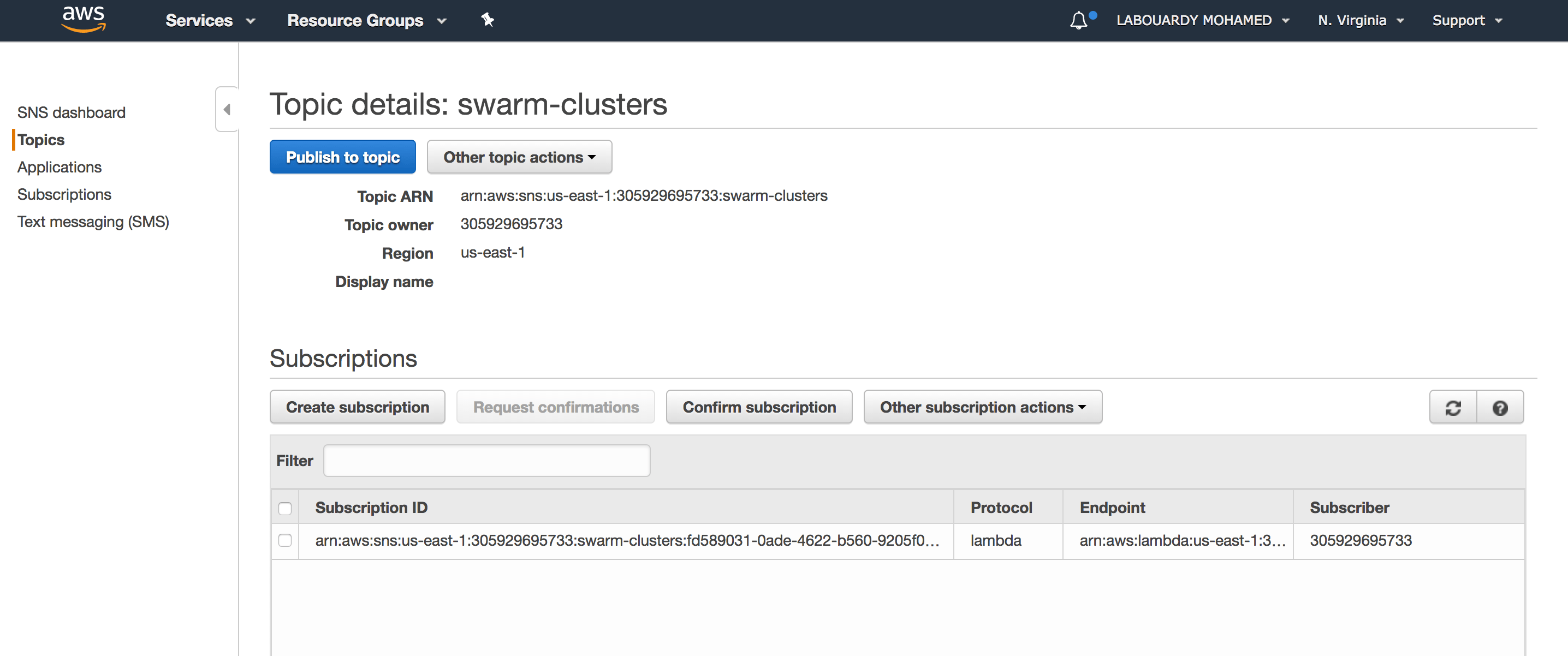

The SNS topic triggers a subscribed Lambda function:

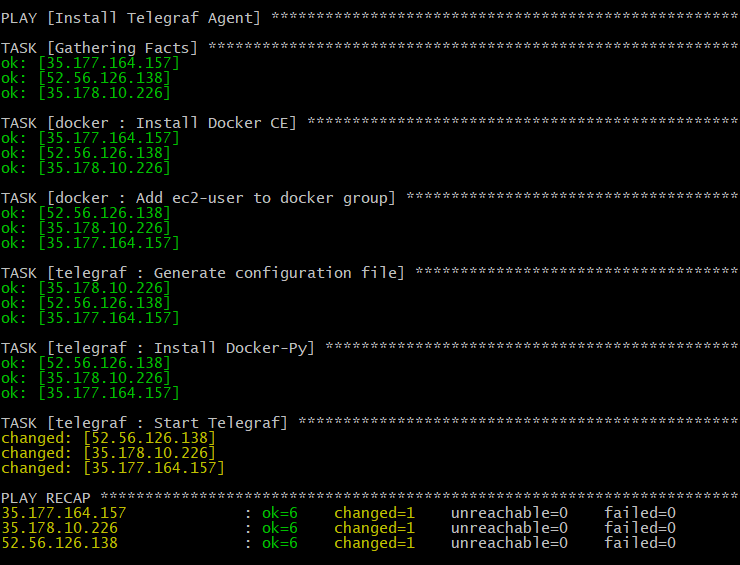

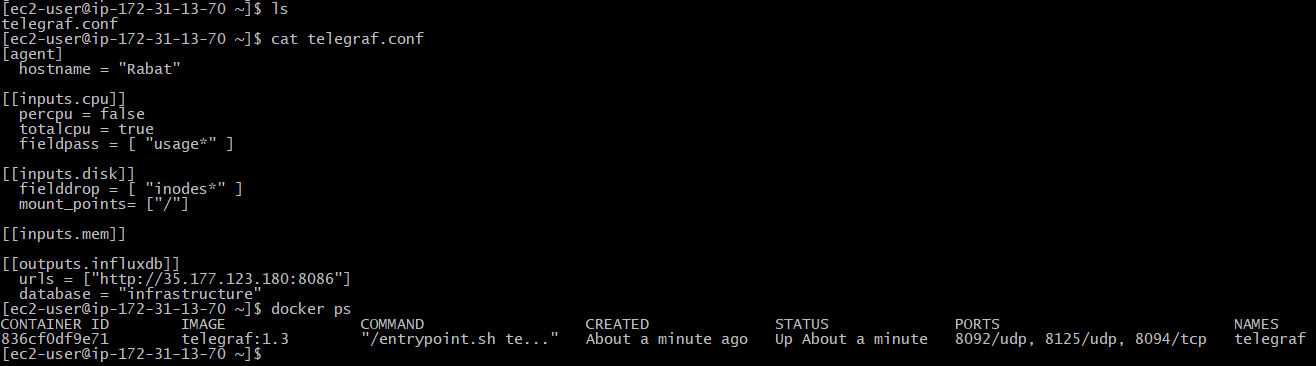
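On the receiving side, the subscribed function gets an SNS event whose `Message` field holds the CloudWatch alarm notification as a JSON string. The sketch below decodes just enough of both layers to decide whether the alarm has fired. The struct definitions are trimmed-down assumptions covering only the fields used, and the alarm name in the sample is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// SNSEvent models only the fields of the SNS-to-Lambda event used here.
type SNSEvent struct {
	Records []struct {
		Sns struct {
			Subject string `json:"Subject"`
			Message string `json:"Message"`
		} `json:"Sns"`
	} `json:"Records"`
}

// AlarmMessage models the part of the CloudWatch alarm notification we need.
type AlarmMessage struct {
	AlarmName     string `json:"AlarmName"`
	NewStateValue string `json:"NewStateValue"`
}

// AlarmFired reports whether any record carries an alarm in the ALARM state.
func AlarmFired(event []byte) (bool, error) {
	var evt SNSEvent
	if err := json.Unmarshal(event, &evt); err != nil {
		return false, fmt.Errorf("decode sns event: %w", err)
	}
	for _, record := range evt.Records {
		var alarm AlarmMessage
		if err := json.Unmarshal([]byte(record.Sns.Message), &alarm); err != nil {
			return false, fmt.Errorf("decode alarm message: %w", err)
		}
		if alarm.NewStateValue == "ALARM" {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	// "queue-not-empty" is a made-up alarm name for the demo.
	event := []byte(`{"Records":[{"Sns":{"Message":"{\"AlarmName\":\"queue-not-empty\",\"NewStateValue\":\"ALARM\"}"}}]}`)
	fired, err := AlarmFired(event)
	if err != nil {
		panic(err)
	}
	fmt.Println(fired)
}
```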

The Lambda function will poll the queue for a new cluster and use the AWS Systems Manager API to provision a Swarm cluster on the fleet of EC2 instances created earlier:

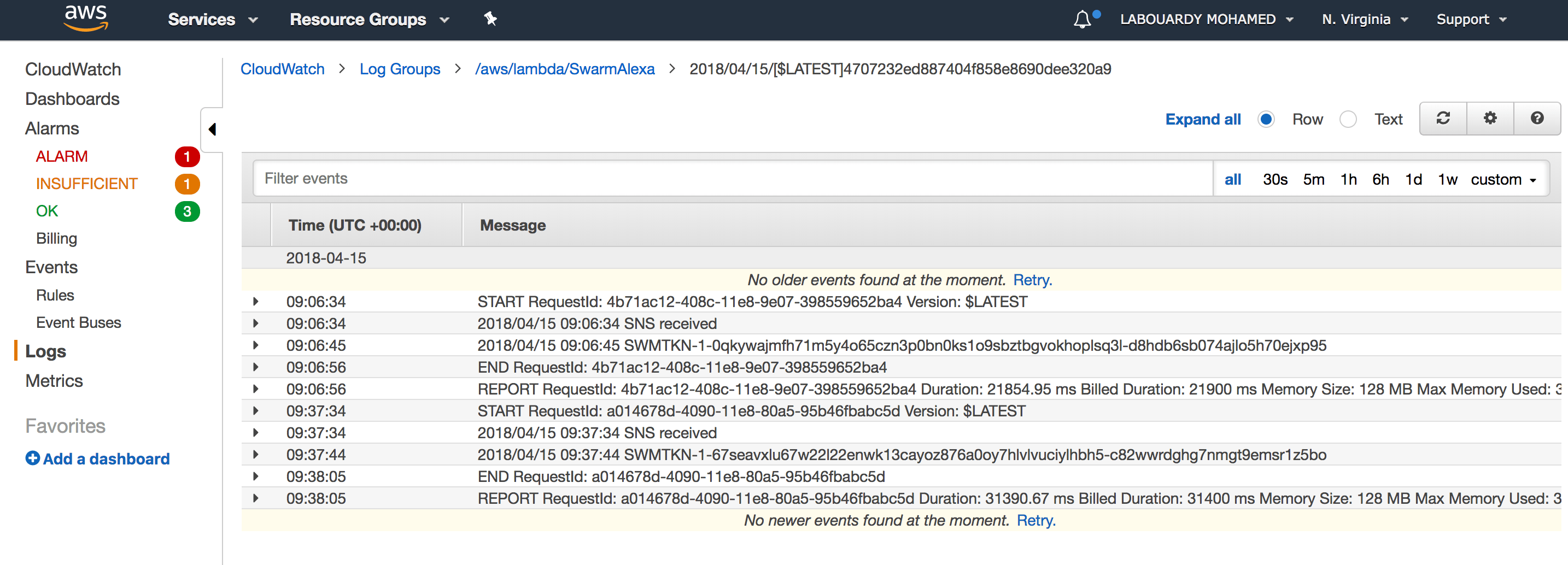

For debugging, the function will output the Swarm Token to CloudWatch:

Finally, it will update the DynamoDB item state from Pending to Done and delete the message from SQS.

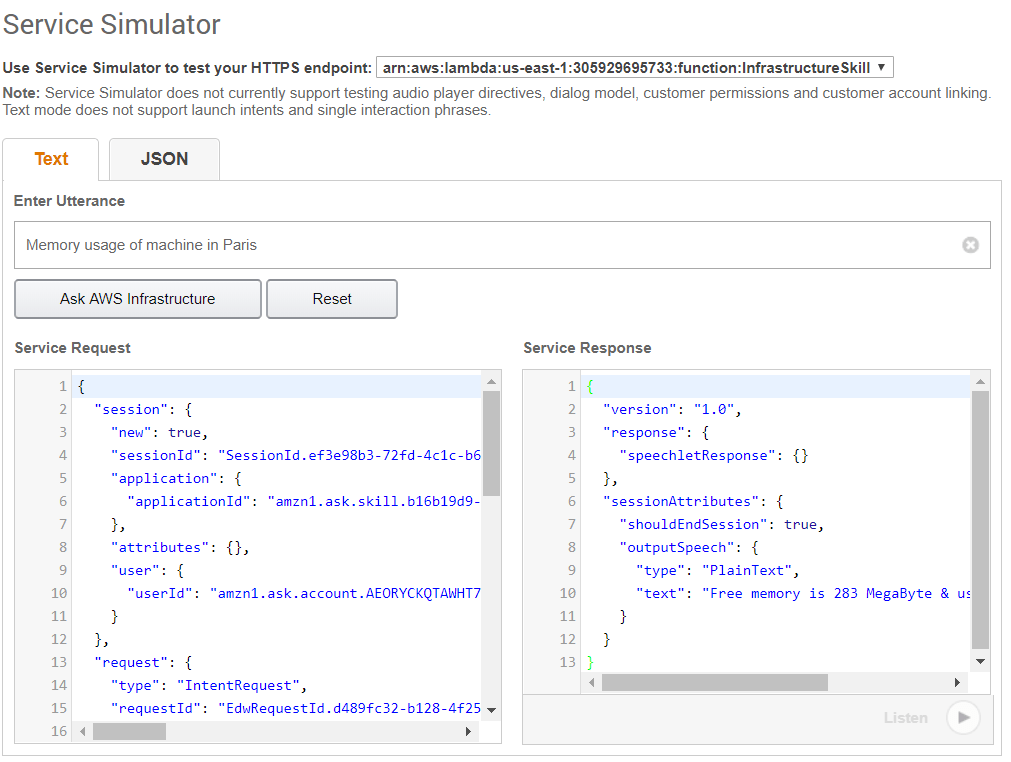

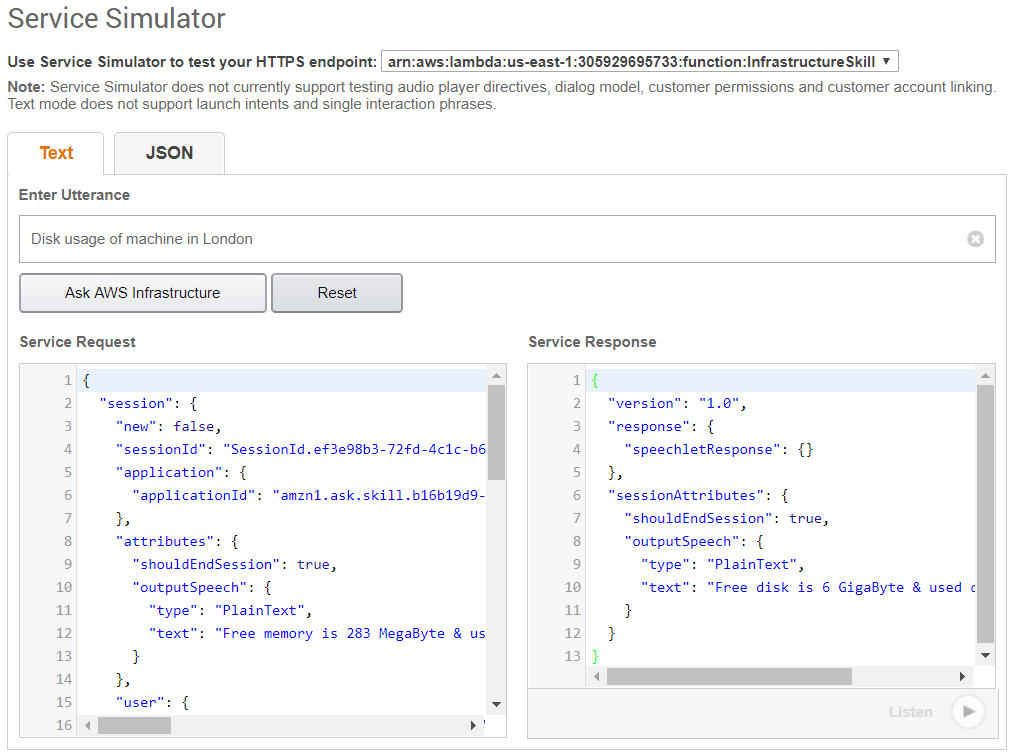

You can test your skill on your Amazon Echo, Echo Dot, or any other Alexa device by saying, “Alexa, open Docker”.

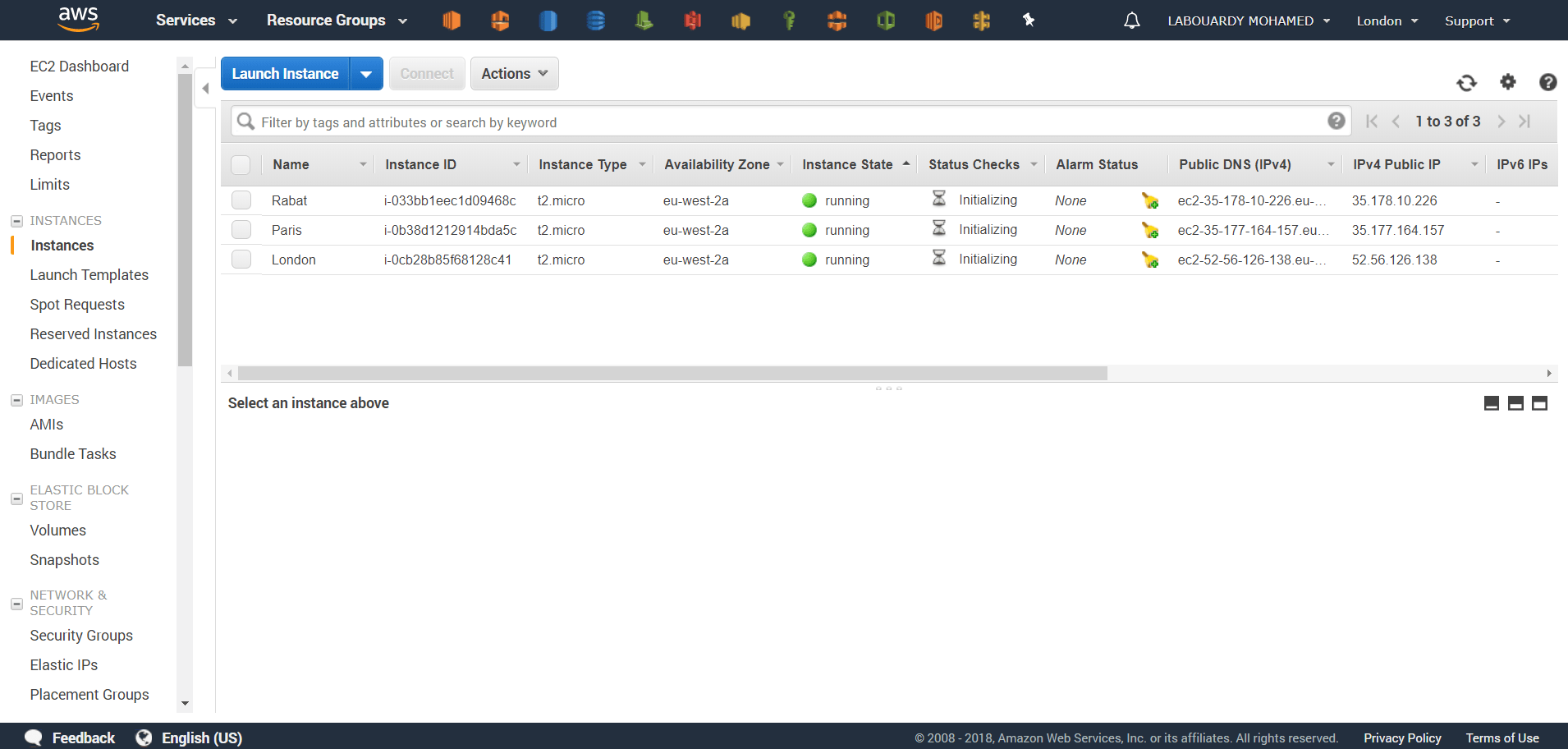

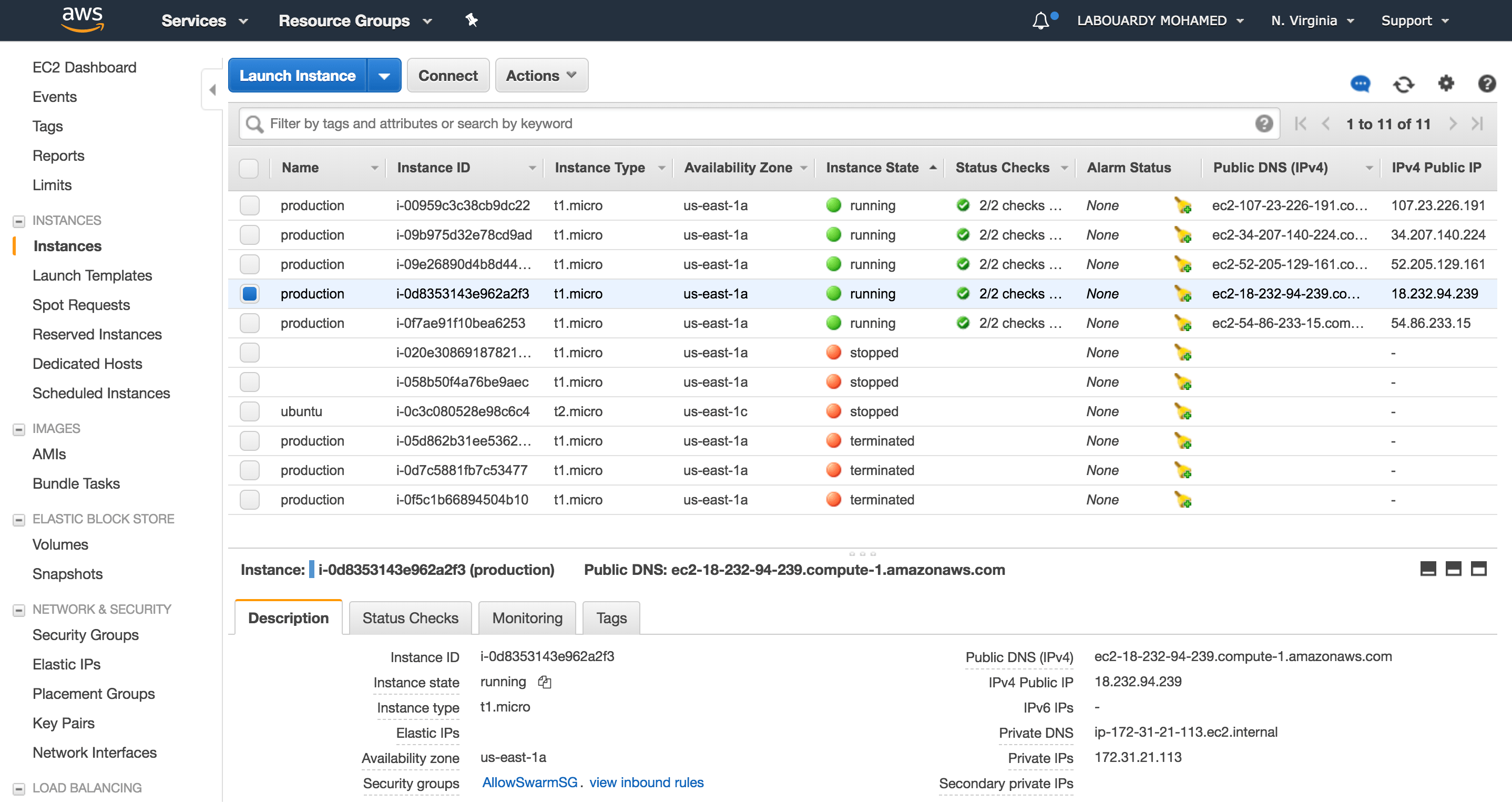

At the end of the workflow described above, a Swarm cluster will be created:

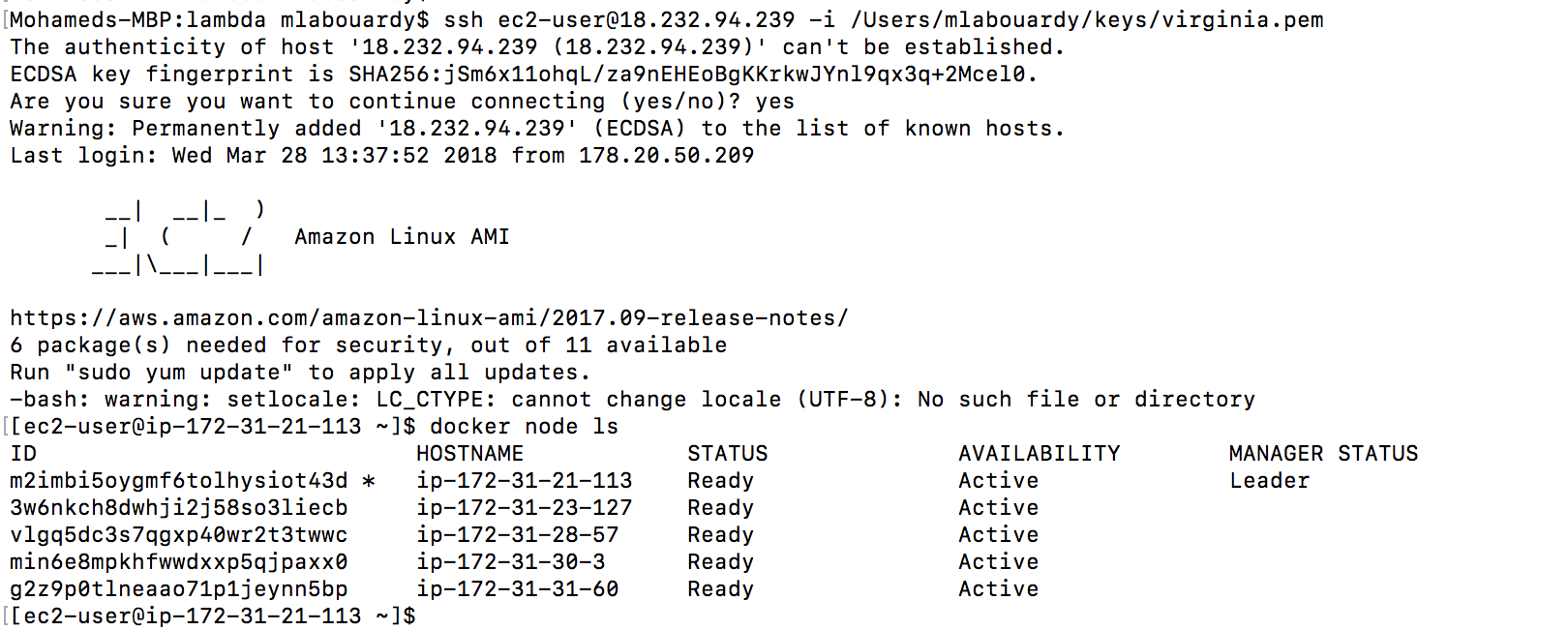

At this point you can check your Swarm status by running the following command:

Improvements & Limitations:

- The Lambda function can hit its execution timeout if the cluster is very large. You can use a master Lambda function to spawn child Lambda functions.

- The CloudWatch and SNS parts can be removed if SQS becomes supported as a Lambda event source (AWS PLEAAASE!). DynamoDB Streams or Kinesis streams cannot be used to notify Lambda, because I wanted some kind of delay so that the instances are fully created before the Swarm cluster is set up (maybe Simple Workflow Service?).

- Put SNS before SQS. SNS can add the message to SQS and trigger the Lambda function, so the CloudWatch alarm is no longer needed.

- You can improve the skill by adding new custom intents to deploy Docker containers on the cluster, or ask Alexa to deploy the cluster in a VPC …

In-depth details about the skill can be found on my GitHub. Make sure to drop your comments, feedback, or suggestions below — or connect with me directly on Twitter @mlabouardy.